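Using OpenAI-compatible AI services and local large models, including Chinese AI services that implement the OpenAI ChatGPT API

In the video translation and dubbing software, a large AI model can serve as a translation channel. Because it takes the surrounding context into account, it can noticeably improve translation quality.

At present, most Chinese AI services expose an API compatible with OpenAI's, so they can be configured directly in the Compatible AI/Local Model channel. A model deployed locally with ollama can be used in the same way.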

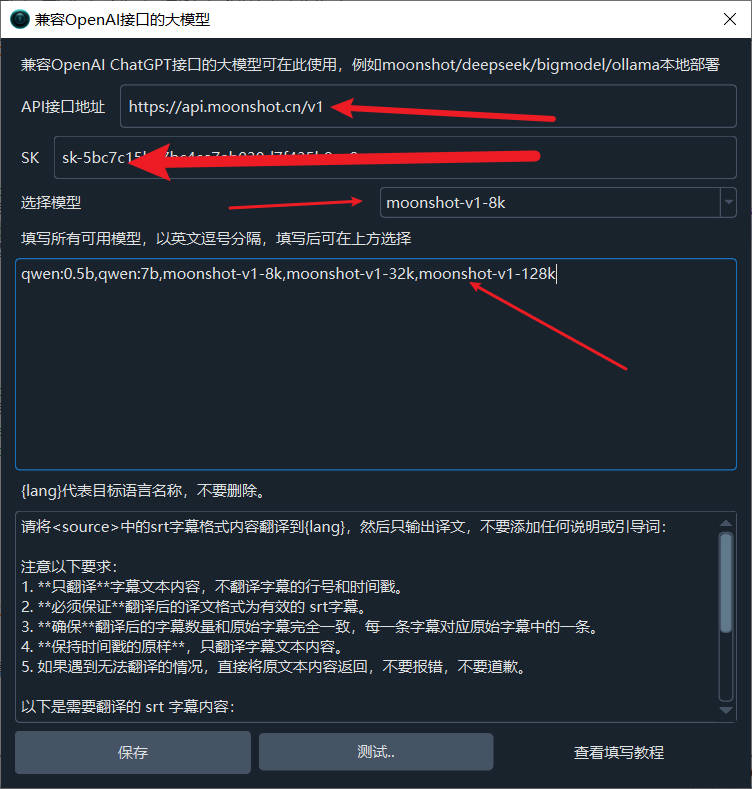

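Using Moonshot AI (月之暗面)

- Open Menu bar -- Translation Settings -- OpenAI ChatGPT API settings panel

- In the API URL field, enter https://api.moonshot.cn/v1

- In the SK field, enter the API Key obtained from the Moonshot open platform; keys are available at https://platform.moonshot.cn/console/api-keys

- In the model text box, enter moonshot-v1-8k,moonshot-v1-32k,moonshot-v1-128k

- Then choose the model you want under Select Model, run the test, and save once it passes.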
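The settings above map onto a standard OpenAI-style chat request. The following is a minimal sketch, assuming the service accepts the usual `/chat/completions` path and a Bearer token; the function names `build_chat_request` and `send_request`, the prompt wording, and the placeholder key are illustrative and are not taken from the software itself.

```python
import json
import urllib.request


def build_chat_request(base_url, api_key, model, text, target_language="English"):
    """Build (but do not send) an OpenAI-style chat completion request."""
    url = base_url.rstrip("/") + "/chat/completions"
    payload = {
        "model": model,
        "messages": [
            # Illustrative prompt only; the software uses its own prompt.
            {"role": "system", "content": f"Translate the user's text into {target_language}."},
            {"role": "user", "content": text},
        ],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send_request(request, timeout=30):
    """Send the request and return the decoded JSON response."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.load(resp)
```

To try it by hand, build a request with `build_chat_request("https://api.moonshot.cn/v1", "<your API key>", "moonshot-v1-8k", "你好")` and pass it to `send_request`. If that call succeeds, the same API URL, SK, and model name should also pass the test in the settings panel.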

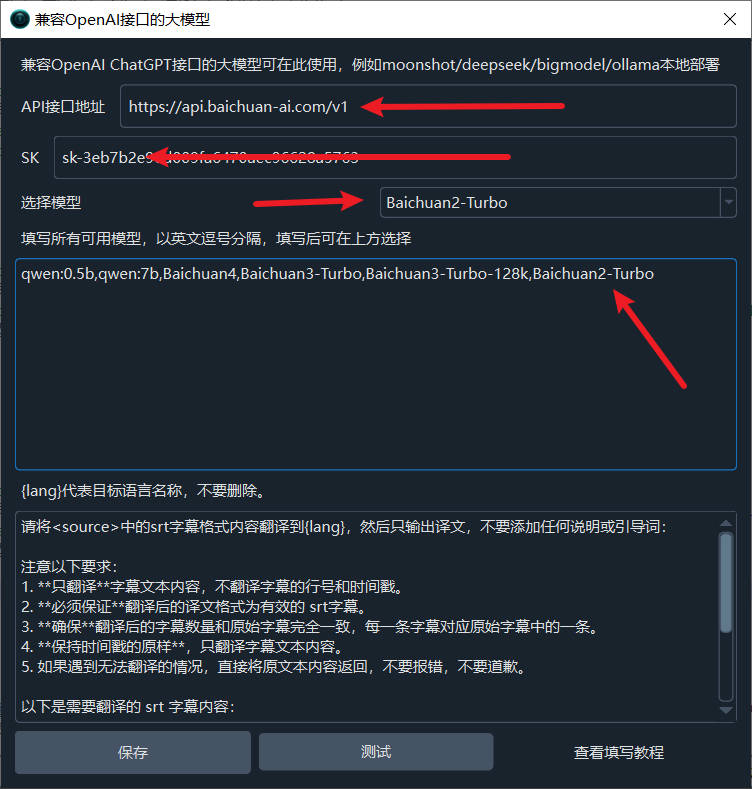

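Using Baichuan AI (百川智能)

- Open Menu bar -- Translation Settings -- OpenAI ChatGPT API settings panel

- In the API URL field, enter https://api.baichuan-ai.com/v1

- In the SK field, enter the API Key obtained from the Baichuan open platform; keys are available at https://platform.baichuan-ai.com/console/apikey

- In the model text box, enter Baichuan4,Baichuan3-Turbo,Baichuan3-Turbo-128k,Baichuan2-Turbo

- Then choose the model you want under Select Model, run the test, and save once it passes.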

01.AI (零一万物)

API Key: https://platform.lingyiwanwu.com/apikeys

API URL: https://api.lingyiwanwu.com/v1

Available model: yi-lightning
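For reference, the three sets of provider settings listed in this guide can be collected in one place. The sketch below is only an illustration: the `PRESETS` dictionary and the `parse_model_list` helper are hypothetical names, and splitting the model text on commas is an assumption about how a comma-separated model list would be read, not a description of the software's internals.

```python
# Values copied from this guide; the dictionary layout itself is illustrative.
PRESETS = {
    "moonshot": {
        "api_url": "https://api.moonshot.cn/v1",
        "models": "moonshot-v1-8k,moonshot-v1-32k,moonshot-v1-128k",
    },
    "baichuan": {
        "api_url": "https://api.baichuan-ai.com/v1",
        "models": "Baichuan4,Baichuan3-Turbo,Baichuan3-Turbo-128k,Baichuan2-Turbo",
    },
    "lingyiwanwu": {
        "api_url": "https://api.lingyiwanwu.com/v1",
        "models": "yi-lightning",
    },
}


def parse_model_list(text):
    """Split comma-separated model text into clean names, skipping empty entries."""
    return [name.strip() for name in text.split(",") if name.strip()]


def models_for(provider):
    """Return the list of model names configured for a provider preset."""
    return parse_model_list(PRESETS[provider]["models"])
```

For example, `models_for("moonshot")` yields the three Moonshot model names as a list, which is the set you would expect to see in the Select Model drop-down.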

Notes:

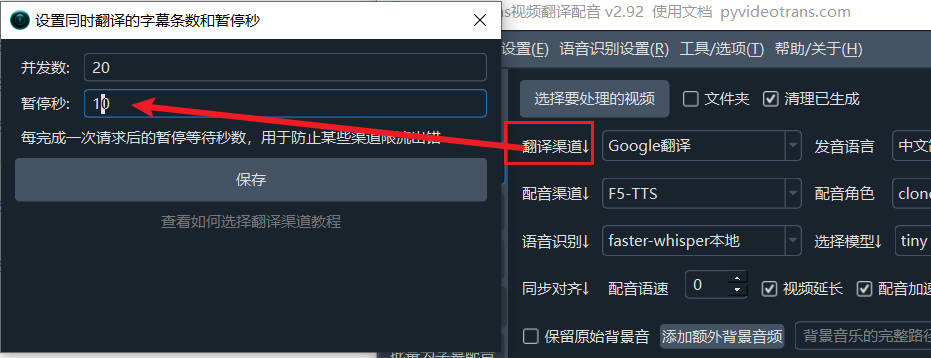

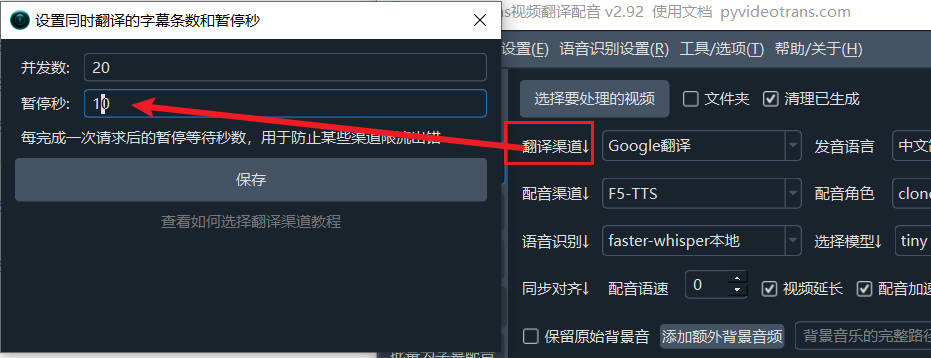

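Most AI translation channels limit the number of requests per minute. If you get an error saying the request rate was exceeded, click "Translation Channel↓" in the main window and set the pause seconds to 10 in the pop-up. The software then waits 10 s after each translation before sending the next request, so at most 6 requests are sent per minute, which keeps you under the rate limit.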
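The arithmetic behind the pause setting, and the wait-after-each-request pattern, can be sketched as follows. This is an illustration rather than the software's actual code: `translate` stands for any function that sends one request, and the `sleep` parameter is injectable only so the loop can be exercised without really waiting.

```python
import time


def max_requests_per_minute(pause_seconds):
    """Upper bound on requests per minute when pausing after every request."""
    if pause_seconds <= 0:
        raise ValueError("pause_seconds must be positive")
    return int(60 // pause_seconds)


def translate_with_pause(lines, translate, pause_seconds=10, sleep=time.sleep):
    """Translate lines one by one, pausing between consecutive requests."""
    results = []
    for index, line in enumerate(lines):
        results.append(translate(line))
        # No need to wait after the final request.
        if index < len(lines) - 1:
            sleep(pause_seconds)
    return results
```

With a 10-second pause, `max_requests_per_minute(10)` gives 6. The real rate is slightly lower because each request also takes some time to complete.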

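If the selected model is not capable enough, it may fail to return the translation in the required format, and the result may contain too many blank lines. This applies especially to locally deployed models, which are usually small because of hardware limits. In that case, try a larger model, or open Menu -- Tools/Options -- Advanced Options and uncheck "Send full subtitle content when using AI translation".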
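To see what this formatting problem looks like in practice, one can compare the number of non-empty lines a model returns with the number of subtitle lines sent. The check below is a hypothetical helper for inspecting model output by hand and is not how the software validates results.

```python
def strip_blank_lines(translated_text):
    """Return the non-empty lines of a model reply, trimmed of whitespace."""
    return [line.strip() for line in translated_text.splitlines() if line.strip()]


def matches_source(source_lines, translated_text):
    """True if the reply has exactly one non-empty line per source line."""
    return len(strip_blank_lines(translated_text)) == len(source_lines)
```

A reply with extra blank lines but the right number of translated lines still passes this check, while a reply that merges or drops lines does not, which is a sign that a larger model may be needed.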

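Deploying the Tongyi Qianwen (Qwen) model locally with ollama

If you are comfortable with some hands-on setup, you can also deploy a large model locally and use it for translation. The steps below use Tongyi Qianwen as the example.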

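1. Download the exe and run it

Open https://ollama.com/download

Click Download. When the download finishes, double-click the file to open the installer and click Install.

When installation finishes, a black or blue terminal window opens automatically. Type the three words ollama run qwen and press Enter; the Tongyi Qianwen model is then downloaded automatically.

Wait for the model download to finish. No proxy is needed, and the download is usually fast.

Once downloaded, the model starts running right away. When the progress reaches 100% and "Success" is displayed, the model is running and the Tongyi Qianwen deployment is complete.

The default API address is http://localhost:11434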
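To confirm that the local server is up and that the model was downloaded, you can query Ollama's `/api/tags` endpoint, which lists installed models. The following is a small sketch using only the Python standard library; the helper names are illustrative.

```python
import json
import urllib.error
import urllib.request

OLLAMA_URL = "http://localhost:11434"


def model_names(tags_response):
    """Extract model names from the JSON returned by Ollama's /api/tags."""
    return [model["name"] for model in tags_response.get("models", [])]


def list_local_models(base_url=OLLAMA_URL, timeout=5):
    """Return installed model names, or None if the server cannot be reached."""
    url = base_url.rstrip("/") + "/api/tags"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return model_names(json.load(resp))
    except (urllib.error.URLError, OSError):
        return None
```

If `list_local_models()` returns `None`, the server is not running. If it returns a list that includes an entry such as `qwen:latest`, the model is installed and ready.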

If the window has been closed, reopening it is easy: open the Start menu, find "Command Prompt" or "Windows PowerShell" (or press Win+Q and search for cmd), open it, and type ollama run qwen.

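2. Use it directly in the console window

As shown in the figure below, once this screen appears you can type text directly into the window and start using the model.

3. The console is not very friendly, so set up a friendlier UI

Open https://chatboxai.app/zh and click Download

After downloading, double-click the file and wait for the application window to open

Click "Start Setup". In the overlay that appears, open the Model tab at the top, choose "Ollama" as the AI model provider, enter http://localhost:11434 as the API host, select Qwen:latest from the model drop-down, and save.

After saving, the chat interface is displayed and ready to use.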

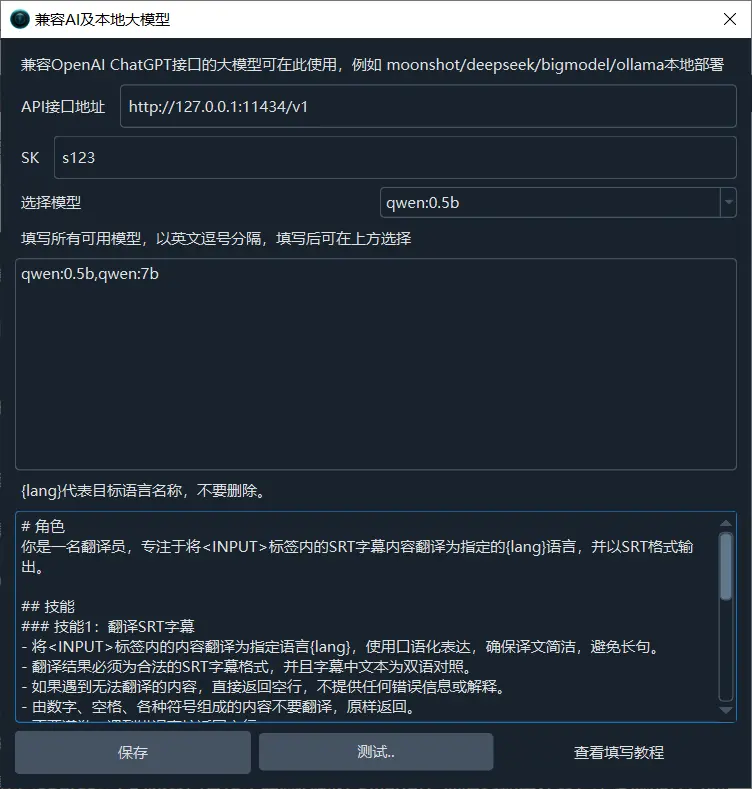

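4. Enter the API details in the video translation and dubbing software

- Open Menu -- Settings -- Compatible OpenAI and Local Models, append ,qwen to the model list in the middle text box (the result is shown below), and then select that model

- In API URL, enter http://localhost:11434/v1; the SK can be anything, for example 1234

- Run the test; if it succeeds, save and start using it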
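If the test fails in the software, it can help to send one request to the local endpoint by hand and inspect the reply. The sketch below assumes Ollama's OpenAI-compatible `/v1/chat/completions` route and the standard response layout (`choices[0].message.content`); `ask_local_model` and `extract_reply` are illustrative names, and the SK value is arbitrary, as noted above.

```python
import json
import urllib.request


def extract_reply(response):
    """Return the assistant text from an OpenAI-style chat completion response."""
    return response["choices"][0]["message"]["content"].strip()


def ask_local_model(text, model="qwen", api_url="http://localhost:11434/v1", sk="1234"):
    """Send one chat request to the local endpoint and return the reply text."""
    payload = {"model": model, "messages": [{"role": "user", "content": text}]}
    request = urllib.request.Request(
        api_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {sk}"},
        method="POST",
    )
    # Local models can be slow on the first call, hence the generous timeout.
    with urllib.request.urlopen(request, timeout=120) as resp:
        return extract_reply(json.load(resp))
```

With ollama running, `print(ask_local_model("Translate into English: 你好"))` should print a short translation. A connection error usually means the ollama window was closed.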

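5. Other models you can use

Besides Tongyi Qianwen, many other models are available, and they are just as easy to use: all it takes is the three words ollama run followed by the model name.

Open https://ollama.com/library to see all model names. Copy the name of the one you want and run ollama run followed by that name.

To open a command window, click the Start menu and find Command Prompt or Windows PowerShell.

For example, to install the openchat model:

Open Command Prompt, type ollama run openchat, press Enter, and wait until Success is displayed.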
