Parakeet-API: A High-Performance Local Speech Transcription Service

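parakeet-api is a local speech transcription service built on the NVIDIA Parakeet-tdt-0.6b model. It provides an OpenAI API-compatible interface and a simple web UI, so you can quickly convert any audio or video file into high-accuracy SRT subtitles. It also works with pyVideoTrans v3.72+.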

Windows all-in-one package download:

Download link: https://pan.baidu.com/s/1cCUyLw93hrn40eFP_qV6Dw?pwd=1234

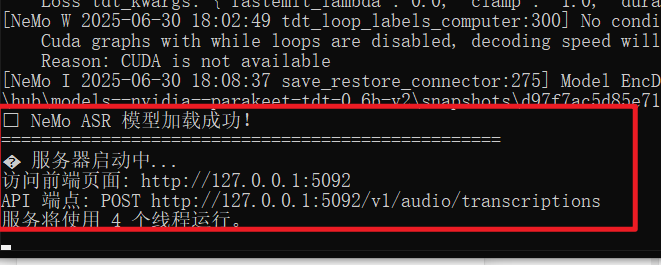

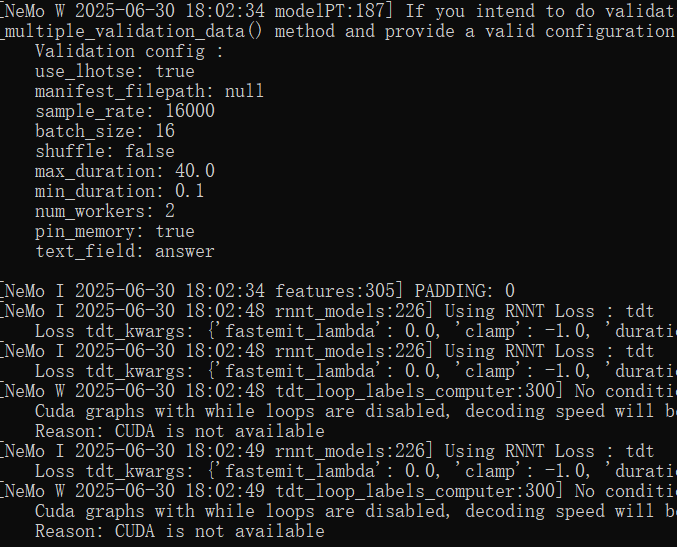

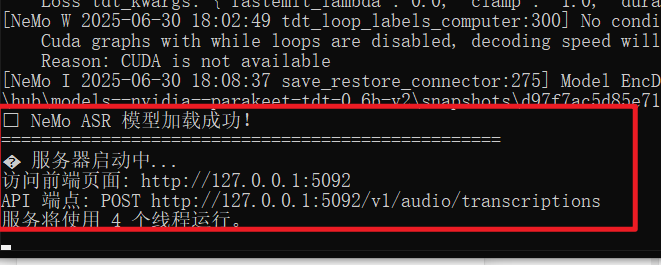

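Usage: unzip the package and double-click 启动.bat. When the screen shown below appears and your browser opens automatically, the service has started successfully.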

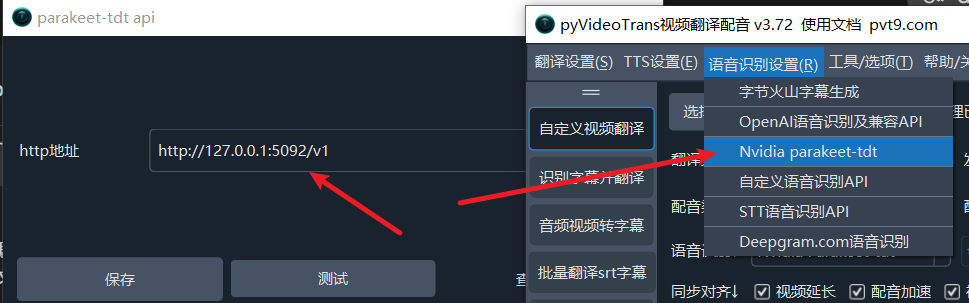

Using with pyVideoTrans

Parakeet-API integrates seamlessly with the video translation tool pyVideoTrans (v3.72 and later).

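- Make sure your parakeet-api service is running locally.
- Open pyVideoTrans.
- In the menu bar, select Speech Recognition (语音识别(R)) -> Nvidia parakeet-tdt.
- In the configuration window that opens, set "HTTP address" ("http地址") to: http://127.0.0.1:5092/v1
- Click "Save" to start using it.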
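The first step above asks you to confirm that the service is running. A minimal sketch of one way to check this from Python is shown below. It only tests whether anything accepts TCP connections on the address used in this guide; the helper name `is_service_listening` is made up for illustration and is not part of parakeet-api or pyVideoTrans.

```python
import socket


def is_service_listening(host: str = "127.0.0.1", port: int = 5092,
                         timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        # create_connection raises OSError (refused, timed out, ...) on failure.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if is_service_listening():
    print("Something is listening on 127.0.0.1:5092")
else:
    print("Nothing is listening on 127.0.0.1:5092; start parakeet-api first")
```

A successful connection only shows that the port is open, not that the process behind it is parakeet-api, so treat it as a quick sanity check before configuring pyVideoTrans.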

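Deploying from Source

🛠️ Installation and Configuration Guide

This project supports Windows, macOS, and Linux. Follow the steps below to install and configure it.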

Step 0: Set up a Python 3.10 environment

If Python 3 is not installed on your machine, follow this tutorial to install it: https://pvt9.com/_posts/pythoninstall
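To confirm which interpreter you will be using, a short check like the following can help. It is only a sketch: the guide names Python 3.10, and whether other versions also work is not stated here, so the exact-match comparison is an assumption and the helper `is_expected_python` is hypothetical.

```python
import sys


def is_expected_python(version_info=sys.version_info, expected=(3, 10)) -> bool:
    """Return True if the major.minor version equals `expected` (assumed: 3.10)."""
    return tuple(version_info[:2]) == tuple(expected)


print("Running Python", ".".join(map(str, sys.version_info[:3])))
print("Matches 3.10:", is_expected_python())
```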

Step 1: Prepare FFmpeg

This project uses ffmpeg to preprocess audio and video formats.

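Windows (recommended):

- Download a build from the FFmpeg GitHub repository and unzip it to obtain `ffmpeg.exe`.
- Place the downloaded `ffmpeg.exe` directly in this project's root directory (at the same level as `app.py`). The program detects and uses it automatically, so no environment variable needs to be configured.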

macOS (using Homebrew):

    brew install ffmpeg

Linux (Debian/Ubuntu):

    sudo apt update && sudo apt install ffmpeg

Step 2: Create a Python virtual environment and install dependencies

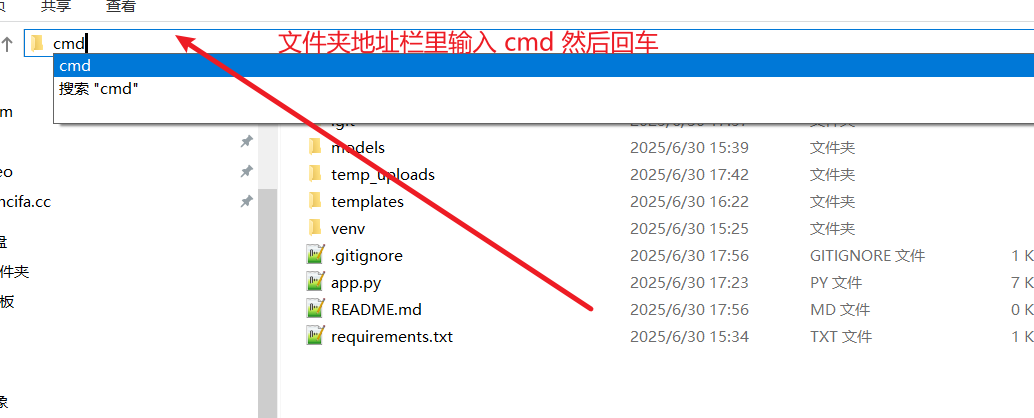

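Download or clone this project's code to your local computer (preferably into a folder on a non-system drive whose path contains only English letters or digits).

Open a terminal or command-line tool and change to the project root directory (on Windows, type `cmd` into the folder's address bar and press Enter).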

Create the virtual environment:

    python -m venv venv

Activate the virtual environment:

- Windows (CMD/PowerShell): `.\venv\Scripts\activate`
- macOS / Linux (Bash/Zsh): `source venv/bin/activate`
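After activation, you can verify that `python` really points into the virtual environment. The following is a small sketch using only the standard library; `in_virtualenv` is an illustrative helper name.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when the interpreter runs inside a venv-style environment."""
    # Inside a venv, sys.prefix points at the environment directory while
    # sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


print("Interpreter:", sys.executable)
print("Virtual environment active:", in_virtualenv())
```

If the second line reports `False`, the activation command was most likely run in a different terminal session.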

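Install the dependencies:

If you do not have an NVIDIA GPU (CPU only):

    pip install -r requirements.txt

If you have an NVIDIA GPU (GPU acceleration):

a. Make sure the latest NVIDIA driver and the corresponding CUDA Toolkit are installed.

b. Uninstall any older PyTorch that may be present:

    pip uninstall -y torch

c. Install the PyTorch build that matches your CUDA version (CUDA 12.6 shown as an example):

    pip install torch --index-url https://download.pytorch.org/whl/cu126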

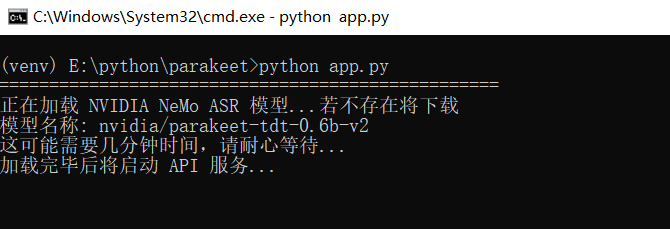
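Once the packages are installed, a quick check like the one below can show whether PyTorch sees your GPU. This is a sketch rather than part of the project: `describe_torch_device` is a hypothetical helper, and it falls back to a plain message if PyTorch is not importable.

```python
def describe_torch_device() -> str:
    """Summarize whether PyTorch is installed and can see a CUDA device."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed in this environment"
    if torch.cuda.is_available():
        # torch.version.cuda is the CUDA version this PyTorch build targets.
        name = torch.cuda.get_device_name(0)
        return f"PyTorch {torch.__version__} with CUDA {torch.version.cuda}: {name}"
    return f"PyTorch {torch.__version__} (CPU only)"


print(describe_torch_device())
```

A "CPU only" result after following the GPU steps usually means the installed PyTorch build does not match the CUDA version on the machine.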

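Step 3: Start the service

In a terminal with the virtual environment activated, run:

    python app.py

A startup message appears once the service is running. The first run downloads the model (about 1.2 GB), so please be patient.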

Startup may print a large number of log messages; these can be ignored.

🚀 Usage

Method 1: Use the web interface

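- Open http://127.0.0.1:5092 in your browser.

- Drag and drop your audio or video file, or click to upload it.

- Click "Start Transcription" and wait for processing to finish. The SRT subtitles then appear below, where you can download them.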

Method 2: API call (Python example)

The openai library makes it easy to call this service.

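    from openai import OpenAI

    # Point the client at the local service instead of api.openai.com.
    client = OpenAI(
        base_url="http://127.0.0.1:5092/v1",
        api_key="any-key",  # placeholder value, as in the original example
    )

    # Upload the file in binary mode and request SRT-formatted output.
    with open("your_audio.mp3", "rb") as audio_file:
        srt_result = client.audio.transcriptions.create(
            model="parakeet",
            file=audio_file,
            response_format="srt",
        )

    print(srt_result)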
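If you want to post-process the subtitles rather than just print them, the SRT text can be split into individual cues. The sketch below assumes `srt_result` is (or has been converted to) a plain string in standard SRT layout. `Cue` and `parse_srt` are hypothetical helpers written for this example and are not provided by parakeet-api or the openai library.

```python
import re
from typing import List, NamedTuple


class Cue(NamedTuple):
    index: int
    start: str
    end: str
    text: str


# Matches an SRT timing line such as "00:00:00,000 --> 00:00:02,500".
_TIMING = re.compile(r"(\d{2,}:\d{2}:\d{2},\d{3})\s*-->\s*(\d{2,}:\d{2}:\d{2},\d{3})")


def parse_srt(srt_text: str) -> List[Cue]:
    """Split SRT text into cues; blocks without a valid header are skipped."""
    cues = []
    normalized = srt_text.replace("\r\n", "\n").strip()
    # Cues are separated by one or more blank lines.
    for block in re.split(r"\n\s*\n", normalized):
        lines = block.strip().split("\n")
        if len(lines) < 2:
            continue
        match = _TIMING.match(lines[1].strip())
        if not lines[0].strip().isdigit() or match is None:
            continue
        cues.append(Cue(int(lines[0]), match.group(1), match.group(2),
                        "\n".join(lines[2:])))
    return cues


sample = (
    "1\n00:00:00,000 --> 00:00:02,500\nHello world\n\n"
    "2\n00:00:02,500 --> 00:00:05,000\nSecond line\n"
)
for cue in parse_srt(sample):
    print(cue.index, cue.start, cue.end, cue.text)
```

To save the subtitles instead, writing the string to a file with a `.srt` extension and UTF-8 encoding is usually enough.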