Python GUI Application Startup Optimization: A Deep Dive from 3 Minutes to "Instant Launch"

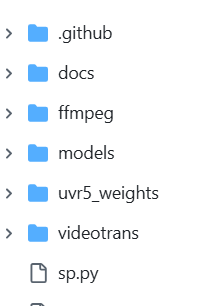

In my spare time, I maintain a video translation tool (pyVideoTrans). It started as a small utility with all its code in a single file; I later rewrote the interface with PySide6 and split the code into multiple modules. This "wild growth" eventually took its toll: the application's cold startup time reached an unbearable two to three minutes.

So, I spent several weekends embarking on a challenging performance optimization journey. Ultimately, the cold startup time was reduced to around 10 seconds.

This article is a complete review of that journey, delving into code details, exploring the root causes behind each performance bottleneck, and sharing the optimization ideas that revived the application.

1. The Beginning: Using Data to Pinpoint the Problem

When facing performance issues, the worst approach is to guess based on intuition. Your gut might say, "The AI library loads slowly," but which specific library? At which step? How long does it take? These questions require precise data to answer.

My toolkit was simple, with only two tools:

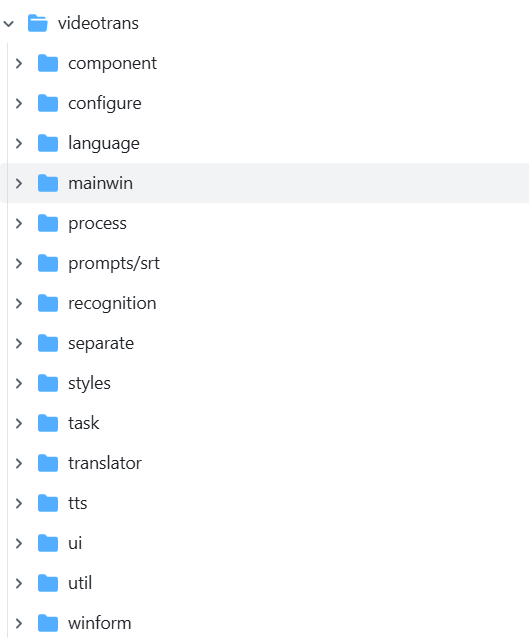

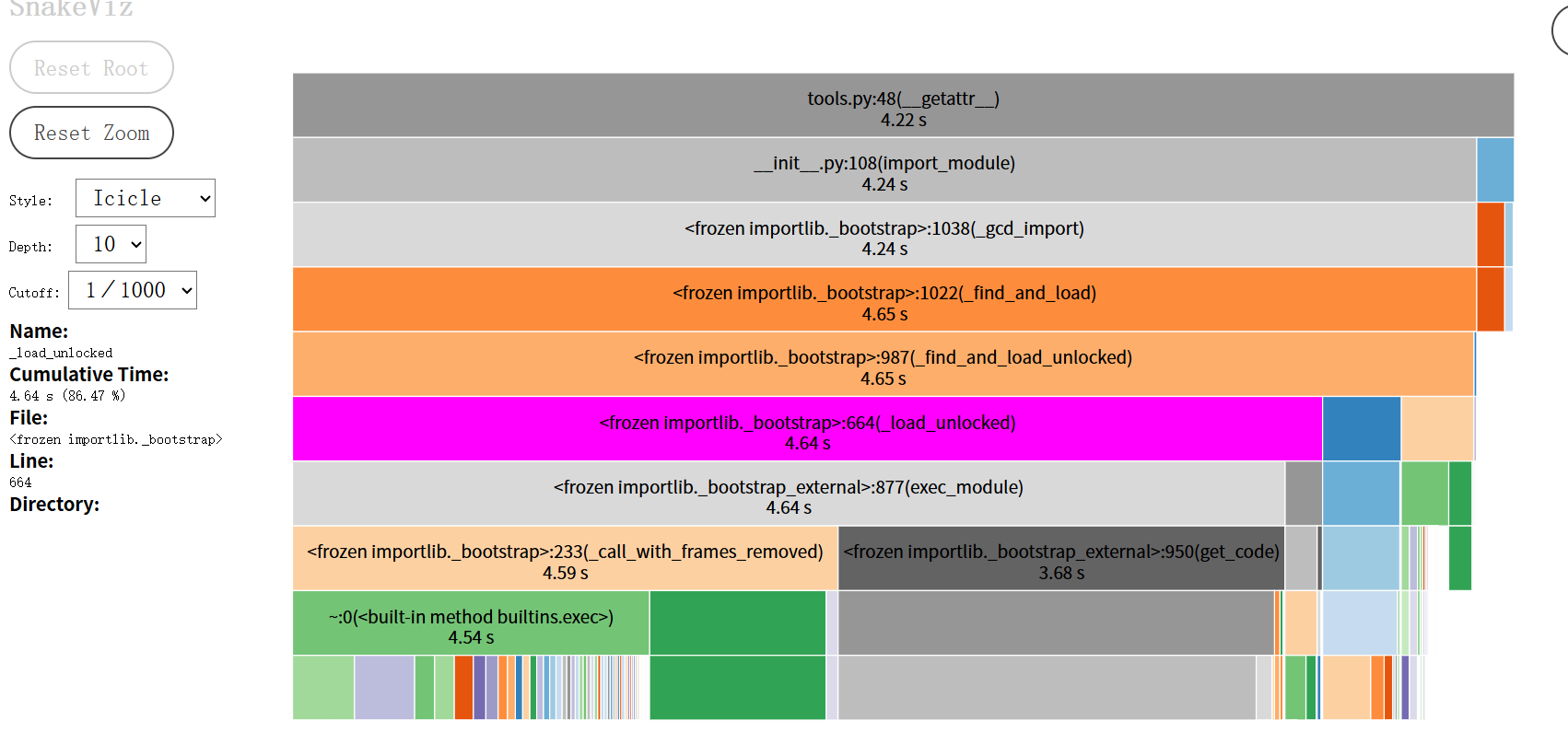

- `cProfile`: Python's built-in performance profiler. It records the number of calls and execution time of all functions during program runtime.

- `snakeviz`: A tool that visualizes `cProfile` output. Its "flame graph" is a treasure map for performance analysis. Install via `pip install snakeviz`.

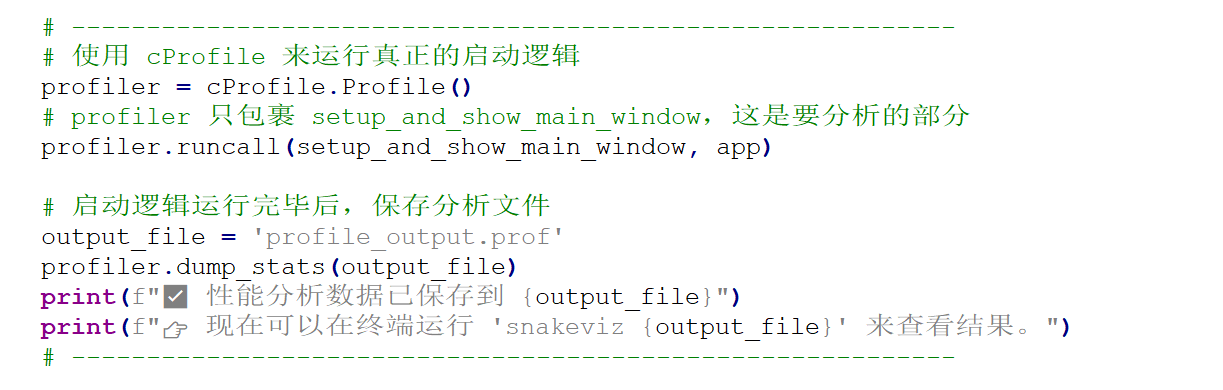

I wrapped the entire startup logic of the application with cProfile, then opened the generated performance data file with snakeviz. A spectacular flame graph appeared before my eyes.
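The exact wrapper is not shown in this article, so here is only a minimal sketch of one way to do it with the standard library. The function names `profile_startup` and `slowest_calls` and the file name `startup.prof` are assumptions for illustration; `startup` stands for whatever callable launches the application.

```python
import cProfile
import pstats


def profile_startup(startup, stats_path="startup.prof"):
    """Run `startup()` under cProfile and dump the raw stats to `stats_path`."""
    profiler = cProfile.Profile()
    profiler.enable()
    try:
        startup()  # imports executed inside this call are profiled as well
    finally:
        profiler.disable()
        profiler.dump_stats(stats_path)
    return stats_path


def slowest_calls(stats_path, limit=10):
    """Print the `limit` entries with the highest cumulative time."""
    stats = pstats.Stats(stats_path)
    stats.sort_stats("cumulative").print_stats(limit)
```

The resulting file can then be opened with `snakeviz startup.prof`, or inspected in the terminal with `slowest_calls("startup.prof")`.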

How to Read the Flame Graph?

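- Horizontal axis represents time: The wider a block, the more time it consumes.

- Vertical axis represents the call stack: In a classic flame graph, lower functions call the functions above them. snakeviz's default "icicle" view draws the same stack upside down, with the root at the top and its callees below.

- What to look for: The wide, flat "plateaus" at the far end of the stack (the top of a flame graph, the bottom of an icicle view). These are the culprits consuming significant time.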

Sure enough, the flame graph clearly showed that most of the time was spent in the import phase. This pointed me to the first and most important direction for optimization: Controlling module loading.

2. The Optimization Journey: A Practice in "Laziness"

First Stop: Initial Success — Cutting the "Eager" Import Chain

The first clue from the flame graph pointed to from videotrans import winform. This seemingly innocent import took over 80 seconds.

1. Problem Code

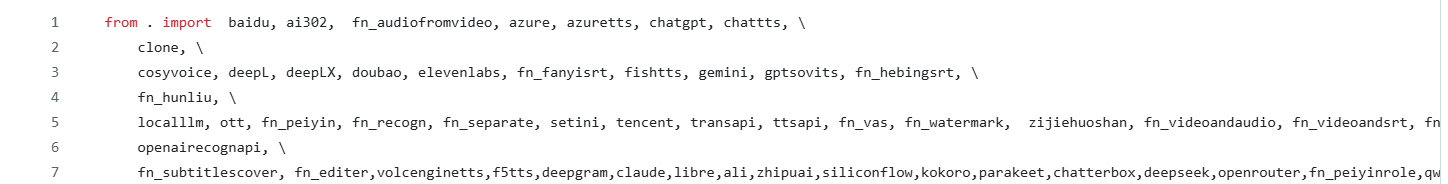

The content of videotrans/winform/__init__.py was very straightforward:

This file defined all modules related to pop-up windows.

2. Analysis: What is "Eager Import"?

This line is a classic example of eager import. Its behavior is "I want it all." When the Python interpreter executes import videotrans.winform, it immediately and unconditionally loads all modules listed in __init__.py (baidu.py, azure.py, etc.) into memory.

This creates a domino effect:

- `import winform` is the first domino.

- It triggers dozens of others (`baidu`, `azure`, ...).

- Many of these submodules depend on heavy AI libraries like `torch` or `modelscope`. When imported, these AI libraries perform complex initialization, check hardware, load underlying libraries, etc.

The result: I just wanted to launch a main window, but I was forced to wait for all background dependencies of potentially useful (or never-used) functional windows to load. That 80-second delay was the price of this "eagerness."

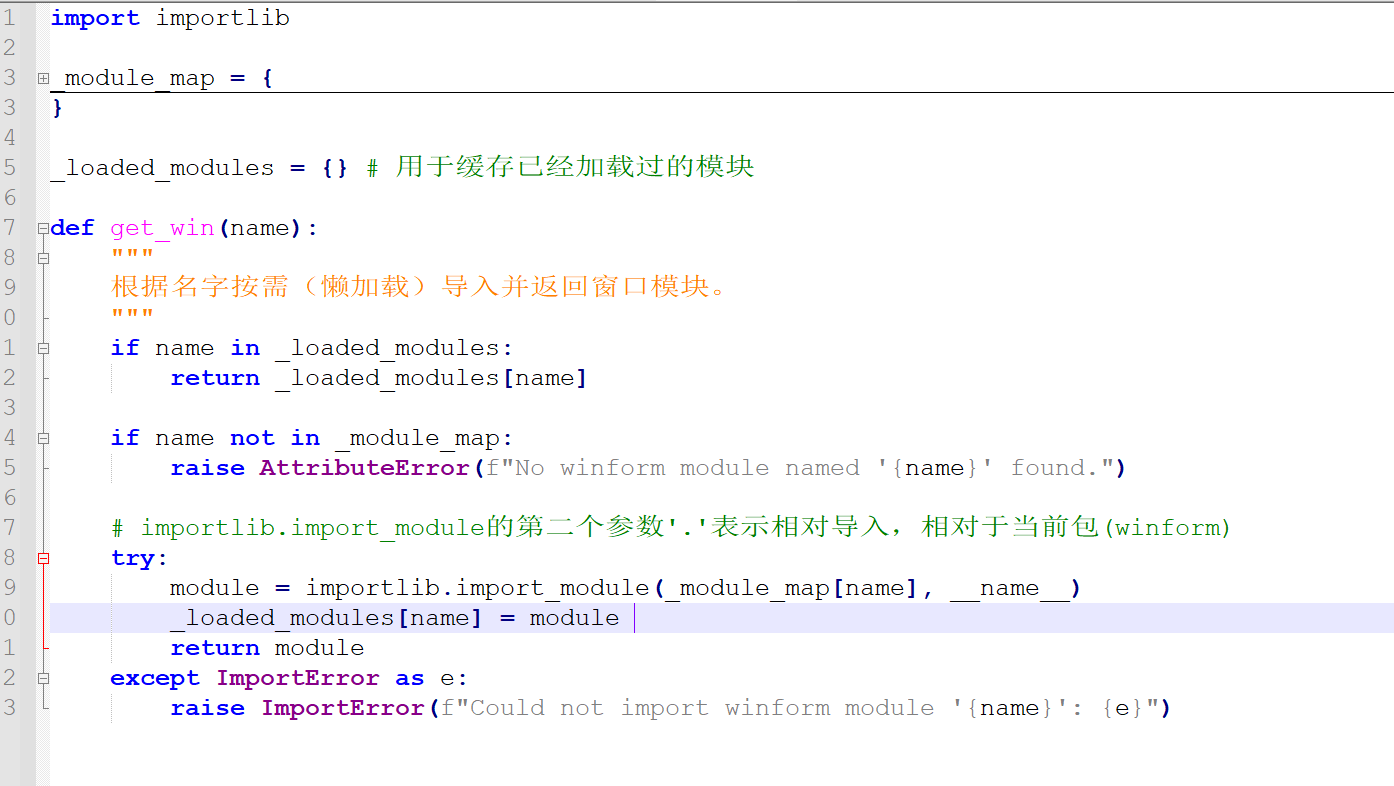

3. Solution: Switch to "Lazy Loading" Mode

The core idea of optimization is simple: Switch from "I want it all" to "I'll give it when you need it" lazy loading.

I refactored videotrans/winform/__init__.py, turning it from a "warehouse manager" into a lightweight "front desk."

Now, import videotrans.winform only executes this minimal __init__.py file, which doesn't depend on any heavy libraries and completes instantly.

The actual import operations are encapsulated inside the get_win function. So, when is get_win called? The answer: When the user actually needs it.

I modified the signal connections for menu items in the main window using lambda:

    # Old code: self.actionazure_key.triggered.connect(winform.azure.openwin)

    # New code:
    self.actionazure_key.triggered.connect(lambda: winform.get_win('azure').openwin())

The role of lambda here is crucial. It creates a tiny anonymous function but does not execute it immediately. Only when the user clicks the menu and the triggered signal is emitted does the lambda body run. At that point, winform.get_win('azure') is executed, which moves the loading cost from program startup to the moment of user interaction.

This optimization had an immediate effect, reducing startup time by over 80 seconds.

Second Stop: Purifying the Polluted "Blueprint" — Complete Decoupling of UI and Logic

Startup was much faster, but creating the main window still took over 20 seconds. Using simple "timing with print statements," I found the problem was at from videotrans.ui.en import Ui_MainWindow.
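For this kind of quick measurement, a small helper keeps the print statements tidy. This is a sketch rather than the code used in the project; the name `timed` and its `sink` parameter are hypothetical.

```python
import time
from contextlib import contextmanager


@contextmanager
def timed(label, sink=print):
    """Report how long the wrapped block took, in seconds."""
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed = time.perf_counter() - start
        sink(f"{label}: {elapsed:.3f}s")


with timed("import json"):
    import json  # stand-in for a suspect import such as the UI module
```

CPython also has a built-in option for this: running `python -X importtime your_script.py` prints a per-module import time breakdown to stderr.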

1. Problem Code

The Ui_MainWindow class is generated by the pyside6-uic tool from a .ui file. It should only contain pure interface layout code, like an architectural blueprint. But inspecting ui/en.py, I found something that shouldn't be there:

    # Old ui/en.py
    from videotrans.configure import config
    from videotrans.recognition import RECOGN_NAME_LIST
    from videotrans.tts import TTS_NAME_LIST

    class Ui_MainWindow(object):
        def setupUi(self, MainWindow):
            # ...
            self.tts_type.addItems(TTS_NAME_LIST)  # The blueprint shouldn't have specific construction materials
            # ...

2. Analysis: Principle of Separation of Concerns

This is a classic violation of the separation of concerns principle.

- The UI file's responsibility should only be to describe "what the interface looks like."

- The logic file's responsibility is to fetch data and decide how to display it on the interface.

My "architectural blueprint" (ui/en.py) not only drew the structure but also went to the "construction market" (import config, import tts) to bring back "cement and bricks" (TTS_NAME_LIST). This meant anyone wanting to look at the blueprint had to bring the entire construction market home first.

3. Solution: Let the Blueprint Return to Purity

The core of optimization is to let each module do only its own job.

- Purify the UI File: I removed all non-PySide6 imports and all code setting text or populating data from `ui/en.py`. This turned it back into a pure "UI skeleton" responsible only for layout, and its load time dropped back to milliseconds.

- Return Logic to the Main Window: In my main window logic class `MainWindow` (`_main_win.py`), I now `import` those business modules. The execution order of the `__init__` method is strictly controlled:

    # _main_win.py
    from PySide6.QtWidgets import QMainWindow

    from videotrans.ui.en import Ui_MainWindow  # This step is now very fast
    from videotrans.configure import config  # Business logic is imported here
    from videotrans.tts import TTS_NAME_LIST

    class MainWindow(QMainWindow, Ui_MainWindow):
        def __init__(self):
            super().__init__()
            # 1. First, use the pure blueprint to build the house frame
            self.setupUi(self)
            # 2. Then, use cement and bricks (business data) to decorate
            self.tts_type.addItems(TTS_NAME_LIST)
            # ...

This optimization not only improved performance but, more importantly, clarified the code structure: UI and logic are now decoupled, which lays a solid foundation for future maintenance and optimization.

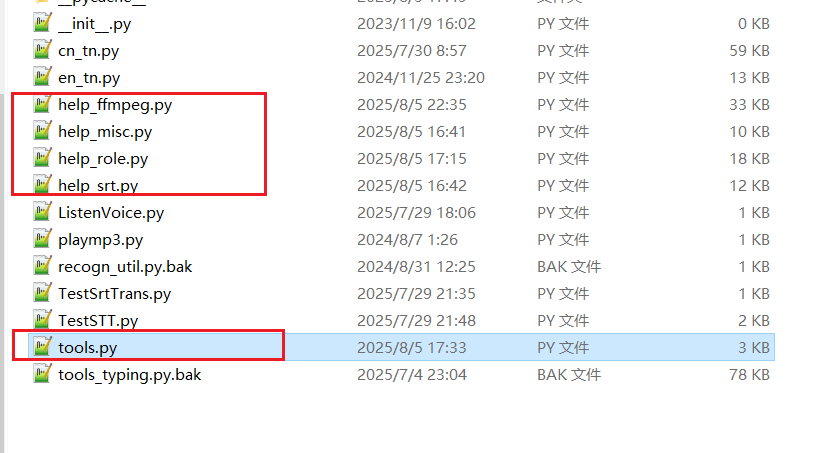

Third Stop: Dismantling the "Universal Toolbox" — Divide and Conquer with Lazy Loading for tools.py

After the first two rounds of optimization, startup speed had improved dramatically. But import videotrans.util.tools still took 6 seconds. tools.py was an 80KB "hodgepodge" file containing dozens of functions with various purposes, from getting role lists to setting network proxies.

1. Analysis: import Is More Than Just "Loading"

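Many people think that if a .py file only contains function definitions, importing it should be fast. This is a common misconception. When Python executes import, it does several things behind the scenes: it reads the file, parses it, compiles it to bytecode, and then executes the module's top-level code (including any imports at the top of the file).

For an 80KB file, the interpreter needs to read the whole source, analyze its syntax structure, and compile it into "bytecode" that the Python virtual machine can execute. This compilation is not free, although CPython normally caches the result under `__pycache__`, so the full cost is paid mainly on the first run or after the file changes. The module's top-level statements, by contrast, run on every import.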
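To see where the time of an import actually goes, the stages can be timed separately. The sketch below does this for an arbitrary source string; `stage_timings` is a hypothetical helper, and the synthetic module of 2,000 tiny functions is only a stand-in for a large real file. Actual numbers depend on the machine and the Python version.

```python
import ast
import time


def stage_timings(source, filename="<tools>"):
    """Time parsing, bytecode compilation, and execution of `source` separately."""
    t0 = time.perf_counter()
    tree = ast.parse(source, filename=filename)    # text -> syntax tree
    t1 = time.perf_counter()
    code = compile(tree, filename, "exec")         # syntax tree -> bytecode
    t2 = time.perf_counter()
    exec(code, {"__name__": "__stage_timings__"})  # run top-level statements
    t3 = time.perf_counter()
    return {"parse": t1 - t0, "compile": t2 - t1, "execute": t3 - t2}


# A synthetic module with 2,000 small functions stands in for a large file.
synthetic = "\n".join(f"def f{i}(x):\n    return x + {i}\n" for i in range(2000))
timings = stage_timings(synthetic)
print({stage: f"{seconds * 1000:.1f} ms" for stage, seconds in timings.items()})
```

Running the same helper on the text of a real module shows whether its import cost comes from its size or from what its top-level code does.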

2. Solution: Divide and Conquer, and Use ast for Ultimate Lazy Loading

The optimization direction was clear: Break a large compilation task into multiple smaller ones, and execute them only when needed.

- Split: I first split `tools.py` into multiple smaller files by functionality, such as `help_role.py`, `help_ffmpeg.py`, etc., placed in the same directory.

- Smart Aggregation: Then, I turned `tools.py` into an intelligent "router" using the `ast` (Abstract Syntax Tree) module to implement lazy loading.

    # Optimized videotrans/util/tools.py
    import os
    import ast
    import importlib

    _function_map = None  # Function map, initially empty

    def _build_function_map_statically():
        # ...
        # Only read file text, no execution or compilation
        source_code = f.read()
        # Parse the text into a data structure (AST)
        tree = ast.parse(source_code)
        # Traverse this data structure to find all function definition names
        for node in tree.body:
            if isinstance(node, ast.FunctionDef):
                _function_map[node.name] = module_name
        # ...

    def __getattr__(name):
        # Triggered on the first call to tools.xxx
        _build_function_map_statically()  # Build the function map once
        # ... Find the module name from the map, then import that small module ...

The application of ast here is the key:

- `ast.parse()` analyzes code as pure text and extracts structural information without executing it. This is fast because it stops before bytecode generation and never runs the module's top-level code.

- The `_build_function_map_statically` function acts like a fast scout. It only "looks" at all `help_*.py` files, drawing a map of "which function is where," without actually entering any "house" (loading a module).

- Only when `tools.some_function()` is actually called does `__getattr__` trigger, importing exactly that small file based on the map. The loading cost is spread across the first call of each different function.
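Since the listing above elides several steps, here is a self-contained sketch of the same idea. It is not the project's actual code: `build_function_map`, `make_lazy_getattr`, and the `help_*.py` glob pattern as a parameter are assumptions for illustration. It relies on module-level `__getattr__`, which Python supports from version 3.7 on.

```python
import ast
import importlib
from pathlib import Path


def build_function_map(directory, pattern="help_*.py"):
    """Map each top-level function name to the file stem that defines it."""
    function_map = {}
    for path in sorted(Path(directory).glob(pattern)):
        # ast.parse only builds a syntax tree; nothing in the file is executed
        tree = ast.parse(path.read_text(encoding="utf-8"), filename=str(path))
        for node in tree.body:
            if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
                function_map[node.name] = path.stem
    return function_map


def make_lazy_getattr(directory, package):
    """Build a module-level __getattr__ that imports helper modules on demand."""
    function_map = None  # filled in on the first attribute lookup

    def lazy_getattr(name):
        nonlocal function_map
        if function_map is None:
            function_map = build_function_map(directory)
        if name not in function_map:
            raise AttributeError(f"module {package!r} has no attribute {name!r}")
        # Import only the small module that defines the requested function
        module = importlib.import_module(f"{package}.{function_map[name]}")
        return getattr(module, name)

    return lazy_getattr
```

In a router module, this could be wired up with a single line such as `__getattr__ = make_lazy_getattr(os.path.dirname(__file__), __package__)`. If two helper files define a function with the same name, the file that sorts last wins in this sketch, so names should be unique across the helpers.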

After this optimization, the time cost of import tools disappeared.

Fourth Stop: Cutting the Root — Implementing a Proxy for the Global Configuration Module

My config.py was a "disaster area." It not only defined constants but also read and wrote .json configuration files upon import, and it served as a global variable that multiple modules read and modified. This top-level I/O severely slowed down any module that imported config.

Solution: Proxy Pattern and Module Replacement

Since the config module's interface couldn't change, I used an advanced technique: the Proxy pattern.

- Rename the original `config.py` to `_config_loader.py` (internal implementation).

- Create a new `config.py` that is itself a "proxy object."

    # New videotrans/configure/config.py
    import sys
    import importlib

    class LazyConfigLoader:
        def __init__(self):
            # Bypass our own __setattr__ so creating the proxy does not trigger a load
            object.__setattr__(self, "_config_module", None)

        def _load_module_if_needed(self):
            # Load _config_loader only on first access
            if self._config_module is None:
                # Bypass __setattr__ here as well: a plain assignment would
                # re-enter this method and recurse without end
                object.__setattr__(
                    self,
                    "_config_module",
                    importlib.import_module("._config_loader", __package__),
                )

        def __getattr__(self, name):  # Intercept read operations: config.params
            self._load_module_if_needed()
            return getattr(self._config_module, name)

        def __setattr__(self, name, value):  # Intercept write operations: config.current_status = "ing"
            self._load_module_if_needed()
            setattr(self._config_module, name, value)

    # Replace the current module with the proxy instance
    sys.modules[__name__] = LazyConfigLoader()

The brilliance of this solution:

- Module Replacement: The line `sys.modules[__name__] = ...` ensures that everywhere `import config` is used, they get an instance of this `LazyConfigLoader`.

- Interception and Forwarding: `__getattr__` and `__setattr__` allow this proxy object to intercept all read and write operations on its attributes.

- State Uniqueness: All read and write operations are ultimately forwarded to the same, only-loaded-once `_config_loader` module. This ensures that `config`, as a global state store, has consistent and synchronized data across all modules.
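The "state uniqueness" point can be checked with a small standalone experiment. The sketch below uses a generic `LazyModuleProxy` class (a hypothetical name) and the standard library module `colorsys` purely as a stand-in for the real configuration module; neither is part of the project.

```python
import importlib


class LazyModuleProxy:
    """Forward attribute reads and writes to a module imported on first use."""

    def __init__(self, module_name):
        # Write to the instance directly so the proxy's own state
        # is not forwarded to the target module
        object.__setattr__(self, "_module_name", module_name)
        object.__setattr__(self, "_module", None)

    def _load(self):
        if self._module is None:
            object.__setattr__(
                self, "_module", importlib.import_module(self._module_name)
            )
        return self._module

    def __getattr__(self, name):
        # Only called for names that are not found on the proxy itself
        return getattr(self._load(), name)

    def __setattr__(self, name, value):
        setattr(self._load(), name, value)


# `colorsys` is only a convenient stand-in for a real configuration module.
config = LazyModuleProxy("colorsys")
config.current_status = "ing"  # the write lands on the real module

import colorsys

print(colorsys.current_status)                 # value seen by a direct importer
print(config.ONE_THIRD == colorsys.ONE_THIRD)  # reads are forwarded as well
```

Because every read and write ends up on the single module object held in `sys.modules`, code that uses the proxy and code that imports the underlying module directly observe the same state.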

Final Sprint: Making the First Impression Perfect

After the above optimizations, my application logic was very fast. But on startup, there were still a few seconds of white screen before the splash screen appeared belatedly.

The Final Bottleneck: The Weight of PySide6.QtWidgets

`import PySide6.QtWidgets` is a very heavy operation. It not only loads Python code but, more importantly, loads many C++ dynamic link libraries that interact with the window system in the background. This is an unavoidable overhead before any window can be displayed.

Solution: Two-Phase Startup

Since it can't be avoided, make it happen when the user least expects it.

- Phase One: Display the Splash Screen

  - In the entry `main.py`, only `import` the most core, lightweight PySide6 components, e.g., `from PySide6.QtWidgets import QApplication, QWidget`.

  - Immediately create and `show()` a minimal, dependency-free startup window `StartWindow`.

- Phase Two: Background Loading

  - After `StartWindow` is displayed, trigger an `initialize_full_app` function via `QTimer.singleShot(50, ...)`.

  - Inside this function, begin executing all the lazy loading processes we optimized earlier: `import config`, `import tools`, create the main window `MainWindow`, etc.

  - When everything is ready, `show()` the main window and `close()` the startup window.

    # Core logic of main.py
    import sys

    from PySide6.QtCore import QTimer
    from PySide6.QtWidgets import QApplication

    # StartWindow and initialize_full_app are defined elsewhere (not shown here)

    if __name__ == "__main__":
        # Phase One: Do the bare minimum
        app = QApplication(sys.argv)
        splash = StartWindow()
        splash.show()

        # Schedule Phase Two to execute after the event loop starts
        QTimer.singleShot(50, lambda: initialize_full_app(splash, app))

        sys.exit(app.exec())

This solution provides users with instant feedback. Double-click the icon, and the splash screen appears almost instantly. The user knows the program has responded; all subsequent loading happens behind this friendly interface, greatly improving the user experience.

Conclusion

This multi-day performance optimization journey was like a deep "archaeological dig" into the code. It made me deeply understand that good software design isn't just about implementing features but also about continuous attention to structure, performance, and user experience. Looking back on the entire process, I summarize a few insights:

- Data-Driven, Get to the Root: Without performance profiling, all optimization is guesswork.

- Laziness Is a Virtue: At startup, "load on demand" is the highest design principle. Don't prepare anything before the user needs it.

- Understand the Cost of `import`: It's not free. Large files and long import chains accumulate significant compilation costs.

- Modularity and Single Responsibility: Splitting "hodgepodge" modules is the fundamental way to solve performance and maintainability issues.

- Leverage Language Dynamic Features: Tools like `importlib`, `ast`, and `__getattr__`, though not commonly used, are "Swiss Army knives" that can work miracles in solving complex loading problems.