F5-TTS-api

Project source code: https://github.com/jianchang512/f5-tts-api

This is the API and web UI for the F5-TTS project.

F5-TTS is an advanced text-to-speech system that uses deep learning technology to generate realistic, high-quality human voices. With just a 10-second audio sample, it can clone your voice. F5-TTS accurately reproduces speech and infuses it with rich emotional tones.

Original voice sample of the Queen of Women's Kingdom:

Cloned audio:

Windows Integrated Package (Includes F5-TTS Model and Runtime Environment)

123 Cloud Download: https://www.123684.com/s/03Sxjv-okTJ3

HuggingFace Download: https://huggingface.co/spaces/mortimerme/s4/resolve/main/f5-tts-api-v0.3.7z?download=true

Compatible Systems: Windows 10/11 (Extract and use after download)

Usage Instructions:

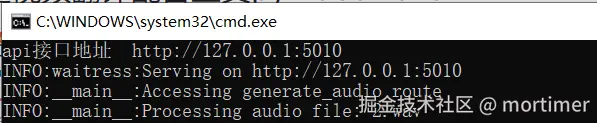

Start API Service: Double-click run-api.bat; the API address is http://127.0.0.1:5010/api.

API service must be started to use it in translation software.

The integrated package uses CUDA 11.8 by default. If you have an NVIDIA GPU with CUDA/cuDNN configured, the system will automatically use GPU acceleration. To use a higher CUDA version, e.g., 12.4, follow these steps:

Navigate to the folder containing

api.py, typecmdin the address bar and press Enter, then run the following commands in the terminal:

.\runtime\python -m pip uninstall -y torch torchaudio

.\runtime\python -m pip install torch torchaudio --index-url https://download.pytorch.org/whl/cu124

F5-TTS excels in efficiency and high-quality voice output. Compared to similar technologies that require longer audio samples, F5-TTS generates high-fidelity speech with minimal audio input and effectively conveys emotions, enhancing the listening experience—a feat many existing technologies struggle to achieve.

Currently, F5-TTS supports both English and Chinese.

Usage Tip: Proxy/VPN

The model needs to be downloaded from huggingface.co. Since this site is inaccessible in some regions, set up a system or global proxy in advance; otherwise, model download will fail.

The integrated package includes most required models, but it may check for updates or download additional small dependency models. If you encounter an

HTTPSConnecterror in the terminal, you still need to set up a system proxy.

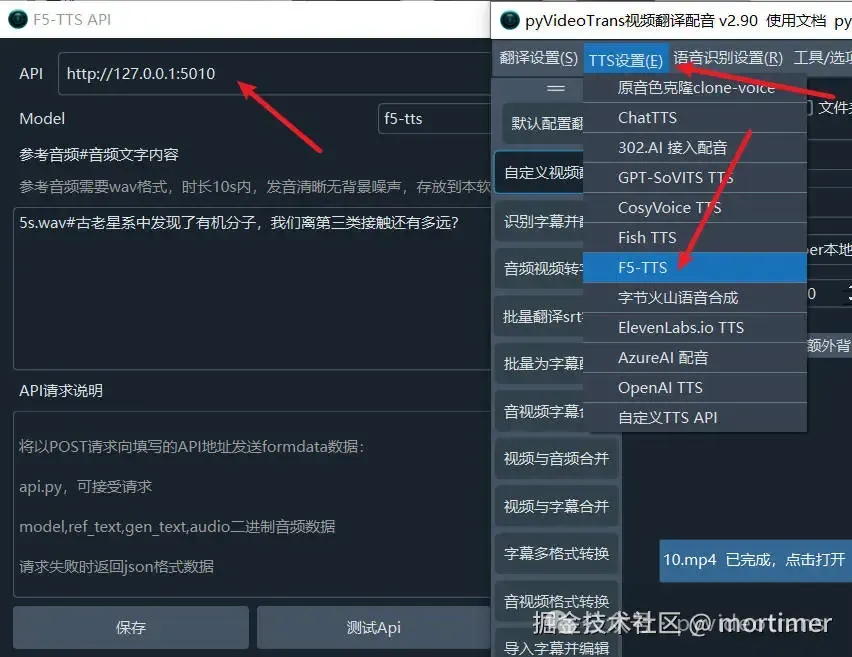

Using in Video Translation Software

Start the API service. API service must be started to use it in translation software.

Open the video translation software, go to TTS settings, select F5-TTS, and enter the API address (default: http://127.0.0.1:5010).

Enter the reference audio and audio text.

It is recommended to select the f5-tts model for better generation quality.

Using api.py in Third-Party Integrated Packages

- Copy

api.pyand theconfigsfolder to the root directory of the third-party integrated package. - Check the path of the integrated

python.exe, e.g., in thepy311folder. In the root directory's address bar, typecmdand press Enter, then run:.\py311\python api.py. If you seemodule flask not found, first run.\py311\python -m pip install waitress flask.

Using api.py After Deploying the Official F5-TTS Project from Source

- Copy

api.pyand theconfigsfolder to the project directory. - Install the required modules:

pip install flask waitress. - Run

python api.py.

API Usage Example

import requests

res = requests.post('http://127.0.0.1:5010/api', data={

"ref_text": 'Enter the text content corresponding to 1.wav here',

"gen_text": '''Enter the text to generate here.''',

"model": 'f5-tts'

}, files={"audio": open('./1.wav', 'rb')})

if res.status_code != 200:

print(res.text)

exit()

with open("ceshi.wav", 'wb') as f:

f.write(res.content)Compatible with OpenAI TTS Interface

The voice parameter must separate the reference audio and its corresponding text with three # symbols, e.g.,

1.wav###你说四大皆空,却为何紧闭双眼,若你睁开眼睛看看我,我不相信你,两眼空空。 This means the reference audio is 1.wav located in the same directory as api.py, and the text in 1.wav is "你说四大皆空,却为何紧闭双眼,若你睁开眼睛看看我,我不相信你,两眼空空."

The returned data is fixed as WAV audio data.

import requests

import json

import os

import base64

import struct

from openai import OpenAI

client = OpenAI(api_key='12314', base_url='http://127.0.0.1:5010/v1')

with client.audio.speech.with_streaming_response.create(

model='f5-tts',

voice='1.wav###你说四大皆空,却为何紧闭双眼,若你睁开眼睛看看我,我不相信你,两眼空空。',

input='你好啊,亲爱的朋友们',

speed=1.0

) as response:

with open('./test.wav', 'wb') as f:

for chunk in response.iter_bytes():

f.write(chunk)