Open-source large language models (LLMs) such as DeepSeek and Qwen are excellent. With tools like Ollama and LM Studio, you can easily set up a local LLM service and integrate it into various AI applications, such as video translation software.

However, because personal computers have limited memory, locally deployed LLMs are usually small versions with 1.5B, 7B, 14B, or 32B parameters.

DeepSeek's official online service uses the R1 model, which has a staggering 671B parameters. Because of this large gap, local models are considerably less capable, and you cannot use them as freely as online models. If you do, you may run into strange problems, such as the prompt appearing in the translation results, original and translated text mixed together, or even garbled characters.

The root cause is that small models are less capable and weaker at understanding and following complex prompts.

Therefore, when using local large language models for video translation, keep the following points in mind to achieve better translation results:

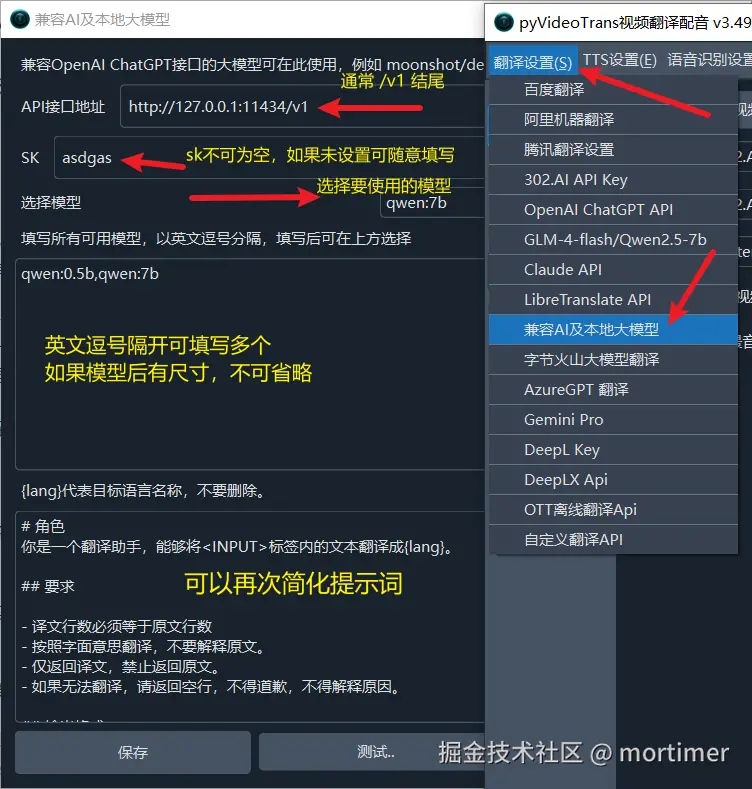

I. Correctly Configure the API Settings of the Video Translation Software

Enter the API address of the locally deployed model into the API Endpoint field under Translation Settings --> Compatible AI & Local LLM in the video translation software. Typically, the API endpoint should end with /v1.

- If your API endpoint requires an API Key, enter it in the SK text box. If not, enter any value, such as

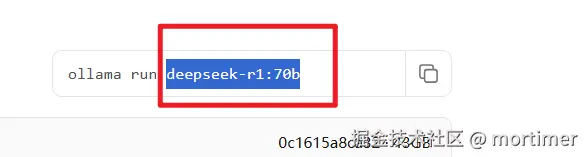

1234, but do not leave it blank. - Enter the model name in the Available Models text box. Note: Some model names may include size information, such as

deepseek-r1:8b. The:8bat the end should also be included.

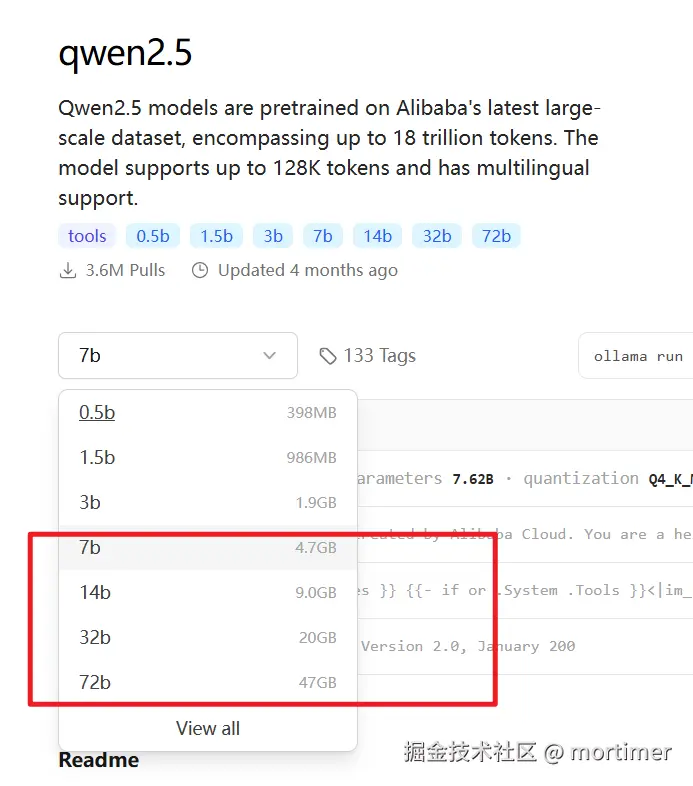
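For context, an OpenAI-compatible endpoint is usually called by appending /chat/completions to the /v1 address. The sketch below, using only the Python standard library, shows how the three fields (endpoint, key, model name) fit into such a request. The function names are hypothetical and not taken from the video translation software. By default Ollama serves this API at http://127.0.0.1:11434/v1 and LM Studio at http://localhost:1234/v1, but check your own setup.

```python
import json
import urllib.request


def build_chat_request(api_endpoint, api_key, model, prompt):
    """Build a POST request for an OpenAI-compatible chat completions route (hypothetical helper)."""
    # The endpoint entered in the software normally ends with /v1;
    # the chat route is appended to it.
    url = api_endpoint.rstrip("/") + "/chat/completions"
    body = json.dumps({
        "model": model,  # full model name, including any size tag such as ":8b"
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    headers = {
        "Content-Type": "application/json",
        # Local servers that do not check keys typically accept any placeholder, e.g. "1234".
        "Authorization": f"Bearer {api_key}",
    }
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def send(req, timeout=300):
    """Send the request and return the text of the first reply (requires a running server)."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]
```

With a local server running, `send(build_chat_request("http://127.0.0.1:11434/v1", "1234", "deepseek-r1:8b", "Translate into English: Bonjour"))` would return the model's reply. Dropping the ":8b" tag from the model name is a common cause of "model not found" errors.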

II. Prioritize Larger and More Up-to-Date Models

- Choose a model with at least 7B parameters and, if possible, one larger than 14B. The larger the model, the better the results, provided your computer can run it.

- If using the Qwen (通义千问) series of models, prioritize the qwen2.5 series over the 1.5 or 2.0 series.

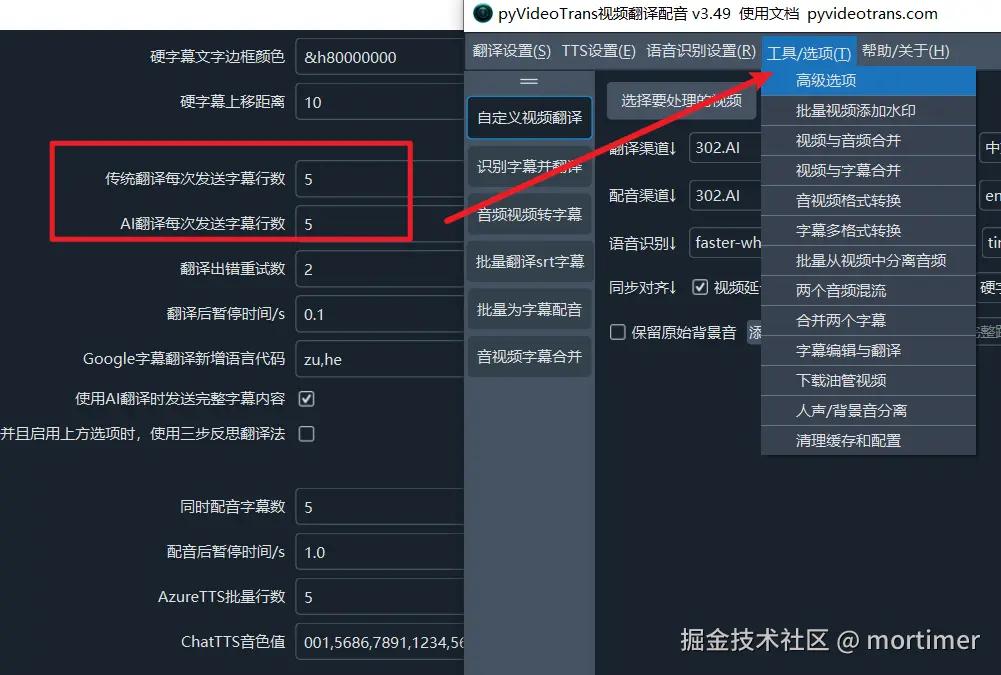
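To judge whether your computer can handle a given size, a common rule of thumb is that the weights alone take roughly parameters × bits per weight ÷ 8 bytes. The helper below is a rough estimate under that assumption, defaulting to 4-bit quantization, which is common for local models. It ignores the context (KV cache) and runtime overhead, so actual memory use will be higher.

```python
def estimate_weight_memory_gb(params_billion, bits_per_weight=4):
    """Rough memory for the model weights only, in decimal gigabytes.

    billions of parameters x bits per weight / 8 bits per byte = gigabytes.
    Context length, KV cache and runtime overhead are not included.
    """
    return params_billion * bits_per_weight / 8


# Compare the model sizes mentioned in this article at 4-bit quantization.
for size in (1.5, 7, 14, 32, 70):
    gb = estimate_weight_memory_gb(size)
    print(f"{size}B at 4-bit: ~{gb:.1f} GB (weights only)")
```

Leave a comfortable margin above the printed figure for the operating system, the video translation software itself, and the model's context.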

III. Uncheck the "Send Complete Subtitle" Option in the Video Translation Software

Unless your deployed model has 70B or more parameters, checking "Send Complete Subtitle" may cause errors in the translated subtitles.
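One way to see why this option matters: with a complete subtitle, the model must reproduce sequence numbers and timestamps along with the translation, whereas a text-only request asks it to return just one translated line per subtitle. The sketch below assumes that is roughly the difference (the software's real implementation may differ). It keeps the SRT numbers and timings locally and reattaches them after translation. parse_srt, text_only_payload and reattach are hypothetical helpers.

```python
import re

SRT_SAMPLE = """1
00:00:01,000 --> 00:00:03,000
Hello everyone.

2
00:00:03,500 --> 00:00:05,000
Welcome back.
"""


def parse_srt(srt_text):
    """Split SRT text into (index, timing, text) tuples; multi-line text is joined with spaces."""
    blocks = []
    for block in re.split(r"\n\s*\n", srt_text.strip()):
        lines = block.strip().splitlines()
        if len(lines) < 3:
            continue  # skip malformed blocks
        blocks.append((lines[0], lines[1], " ".join(lines[2:])))
    return blocks


def text_only_payload(blocks):
    # One subtitle per line: the model never sees numbers or timestamps.
    return "\n".join(text for _, _, text in blocks)


def reattach(blocks, translated_text):
    """Put the locally kept numbers and timings back around the translated lines."""
    translated = translated_text.splitlines()
    if len(translated) != len(blocks):
        raise ValueError("line count mismatch between source and translation")
    parts = [f"{i}\n{t}\n{tr}" for (i, t, _), tr in zip(blocks, translated)]
    return "\n\n".join(parts) + "\n"
```

Here a small model only has to keep the line count, which is a much simpler task than reproducing the full SRT structure.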

IV. Set the Subtitle Lines Parameters Appropriately

Set both the Traditional Translation Subtitle Lines and AI Translation Subtitle Lines in the video translation software to smaller values, such as 1, 5, or 10. This avoids excessive blank lines and improves translation reliability.

The smaller the value, the lower the chance of translation errors, but translation quality also drops because the model sees less context at a time. The larger the value, the better the translation when no errors occur, but the more prone it is to errors.
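The lines parameter effectively acts as a batch size: subtitles are sent to the model N lines at a time. The sketch below illustrates the trade-off under that assumption. translate_batch is a hypothetical stand-in for whatever call actually reaches the model, and falling back to line-by-line translation when the returned line count does not match is one possible recovery strategy, not necessarily what the software does.

```python
def batch_lines(lines, batch_size):
    """Yield consecutive groups of at most batch_size subtitle lines."""
    if batch_size < 1:
        raise ValueError("batch_size must be >= 1")
    for start in range(0, len(lines), batch_size):
        yield lines[start:start + batch_size]


def translate_all(lines, batch_size, translate_batch):
    """Translate lines in batches.

    translate_batch (hypothetical) takes a list of lines and returns a list
    of translated lines. If a batch comes back with the wrong number of
    lines, that batch is retried one line at a time.
    """
    result = []
    for batch in batch_lines(lines, batch_size):
        translated = translate_batch(batch)
        if len(translated) != len(batch):
            # The model merged or dropped lines: retry each line on its own.
            translated = []
            for line in batch:
                out = translate_batch([line])
                translated.append(out[0] if out else "")
        result.extend(translated)
    return result
```

A larger batch_size gives the model more context per request, while the line-by-line fallback keeps the subtitle count aligned when the model fails to keep it.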

V. Simplify the Prompt

A small model may not understand or follow complex instructions well. In that case, simplify the prompt so that it is short and clear.

For example, the default prompt in Software Directory/videotrans/localllm.txt may be complex. If the translation results are unsatisfactory, try simplifying it.

Simplified Example 1:

# Role

You are a translation assistant that can translate the text within the <INPUT> tags into {lang}.

## Requirements

- The number of lines in the translation must be equal to the number of lines in the original text.

- Translate literally, do not interpret the original text.

- Only return the translation, do not return the original text.

- If you cannot translate, return a blank line, do not apologize, and do not explain the reason.

## Output Format:

Output the translation directly. Do not output anything else, such as explanations or lead-in text.

<INPUT></INPUT>

Translation Result:

Simplified Example 2:

You are a translation assistant. Translate the following text into {lang}, keep the number of lines unchanged, only return the translation, and return a blank line if you cannot translate.

Text to be translated:

<INPUT></INPUT>

Translation Result:

Simplified Example 3:

Translate the following text into {lang}, keeping the number of lines consistent. Leave blank if you cannot translate.

<INPUT></INPUT>

Translation Result:

You can simplify and optimize the prompt further to suit your model and content.
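The examples use {lang} and an empty <INPUT></INPUT> pair as placeholders. Assuming they are filled in at run time (the software's actual substitution code is not shown here), a minimal sketch of building the prompt and sanity-checking the reply could look like this. build_prompt and clean_output are hypothetical names. Stripping <think>...</think> is included because reasoning models such as deepseek-r1 may emit their reasoning inside those tags, which is one way extra text ends up in the translation.

```python
import re

TEMPLATE = (
    "Translate the following text into {lang}, keeping the number of lines "
    "consistent. Leave blank if you cannot translate.\n\n"
    "<INPUT></INPUT>\n\n"
    "Translation Result:"
)


def build_prompt(template, lang, lines):
    # str.replace rather than str.format, so other braces in a prompt file are left alone.
    text = "\n".join(lines)
    return template.replace("{lang}", lang).replace("<INPUT></INPUT>", f"<INPUT>{text}</INPUT>")


def clean_output(raw, expected_lines):
    """Return the translated lines, or None if the line count does not match."""
    # Remove any <think>...</think> reasoning block a reasoning model may include.
    text = re.sub(r"<think>.*?</think>", "", raw, flags=re.DOTALL).strip()
    # Drop an echoed "Translation Result:" label if the model repeats it.
    label = "Translation Result:"
    if text.startswith(label):
        text = text[len(label):].strip()
    # Trimming also drops leading/trailing blank lines; a count mismatch
    # then signals the caller to retry (for example with fewer lines).
    lines = text.splitlines()
    if len(lines) != expected_lines:
        return None
    return lines
```

Checking the line count after every reply catches most of the "prompt in the result" and "mixed original and translation" problems before they reach the subtitle file.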

With the optimizations above, even smaller local LLMs can play a bigger role in video translation: fewer errors, better translations, and a better local AI experience.