Compatible AI and Local Large Models: Using Chinese AI Services Compatible with the OpenAI ChatGPT API

In the video translation and dubbing software, large AI models can serve as translation channels. Because they take the surrounding context into account, they can noticeably improve translation quality.

Most Chinese AI services now offer APIs compatible with the OpenAI API, so they can be configured directly in the Compatible AI/Local Models channel. Alternatively, you can deploy a model locally with Ollama and use it through the same channel.

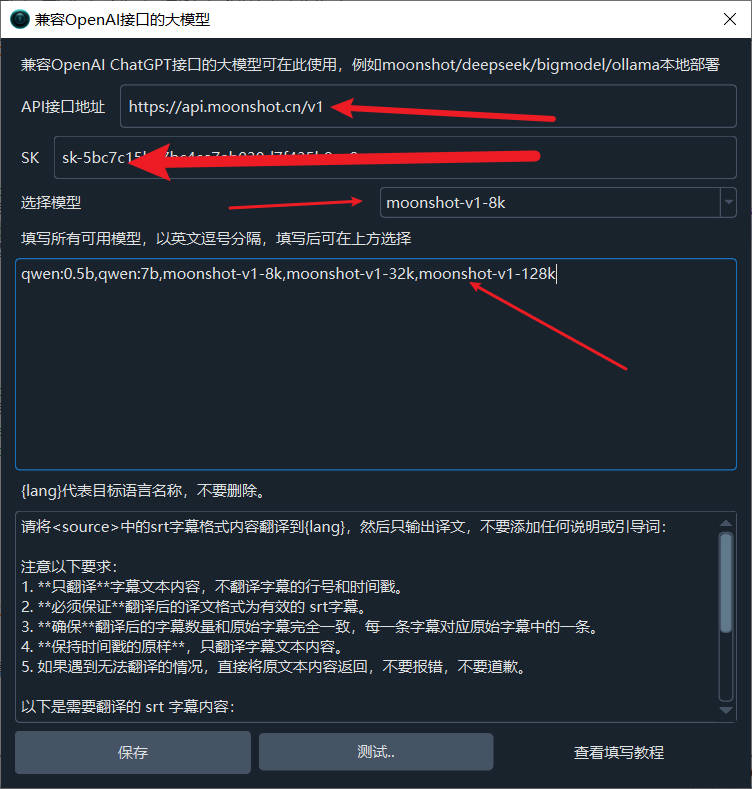

Using Moonshot AI

- Open Menu Bar → Translation Settings → OpenAI ChatGPT API Settings.

- In the API endpoint field, enter https://api.moonshot.cn/v1.

- In the SK field, enter the API Key obtained from the Moonshot open platform at https://platform.moonshot.cn/console/api-keys.

- In the model text box, enter moonshot-v1-8k,moonshot-v1-32k,moonshot-v1-128k.

- Select the desired model from the dropdown, test it to make sure it works, and save.

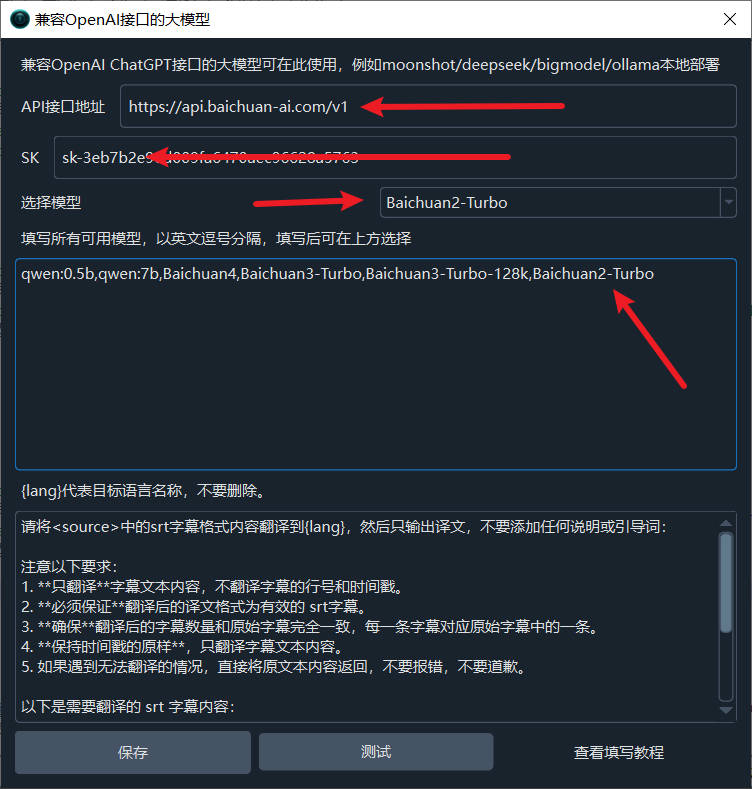
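"OpenAI-compatible" means the service accepts the same request format as the OpenAI API. The minimal Python sketch below (standard library only) shows the kind of request a client sends to such an endpoint.

It rests on these assumptions:
- It uses the standard OpenAI chat completions format, a POST to the API endpoint plus /chat/completions.
- It sends the SK value as a Bearer token.
- The function names and the translation prompt are hypothetical. This is not the software's own code.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str,
                       text: str, target_language: str = "English") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    # OpenAI-compatible services expose chat at <API endpoint>/chat/completions.
    url = base_url.rstrip("/") + "/chat/completions"
    body = {
        "model": model,  # one of the names typed into the model text box
        "messages": [
            # Hypothetical prompt; the software's real prompt is not shown in this article.
            {"role": "system",
             "content": f"Translate the user's subtitle text into {target_language}. "
                        "Reply with the translation only."},
            {"role": "user", "content": text},
        ],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body, ensure_ascii=False).encode("utf-8"),
        headers={
            # Assumption: the SK field is sent as a Bearer token, as in the OpenAI API.
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 60.0) -> str:
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

To call Moonshot, pass a request to `send()`, for example `send(build_chat_request("https://api.moonshot.cn/v1", "<your API Key>", "moonshot-v1-8k", "你好"))`. The same request shape should work for the other providers in this article: change only the endpoint and the model name.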

Using Baichuan AI

- Open Menu Bar → Translation Settings → OpenAI ChatGPT API Settings.

- In the API endpoint field, enter https://api.baichuan-ai.com/v1.

- In the SK field, enter the API Key obtained from the Baichuan open platform at https://platform.baichuan-ai.com/console/apikey.

- In the model text box, enter Baichuan4,Baichuan3-Turbo,Baichuan3-Turbo-128k,Baichuan2-Turbo.

- Select the desired model from the dropdown, test it to make sure it works, and save.
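The model text box takes a comma-separated list, and the dropdown then offers those names. The Python sketch below shows one plausible way to turn that text into dropdown entries: it trims spaces and skips empty or duplicate entries.

This behavior is an assumption, not the software's code. In the real dialog, the safest choice is to type the names exactly as shown, with no spaces.

```python
from typing import List


def parse_model_field(field: str) -> List[str]:
    """Split a comma-separated model text box into unique, trimmed model names."""
    seen = set()
    models = []
    for part in field.split(","):
        name = part.strip()            # tolerate spaces around commas
        if name and name not in seen:  # skip empty entries and repeats
            seen.add(name)
            models.append(name)
    return models


def is_selectable(field: str, selected: str) -> bool:
    """The dropdown can only offer models that appear in the text box."""
    return selected in parse_model_field(field)
```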

Using Lingyi Wanwu (01.AI)

Official website: https://lingyiwanwu.com

Get an API Key: https://platform.lingyiwanwu.com/apikeys

API URL: https://api.lingyiwanwu.com/v1

Available model: yi-lightning
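The three services above differ only in endpoint, key page, and model names. The sketch below collects the values given in this article into one lookup table.

The `Provider` type and the dictionary keys are hypothetical names used only for illustration. `model_field()` rebuilds the comma-separated text you type into the model box.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class Provider:
    api_endpoint: str        # value for the "API endpoint" / "API URL" field
    key_page: str            # where to create the key for the SK field
    models: Tuple[str, ...]  # names for the model text box


# Values copied from this article; the dictionary keys are illustrative only.
PROVIDERS: Dict[str, Provider] = {
    "moonshot": Provider(
        "https://api.moonshot.cn/v1",
        "https://platform.moonshot.cn/console/api-keys",
        ("moonshot-v1-8k", "moonshot-v1-32k", "moonshot-v1-128k"),
    ),
    "baichuan": Provider(
        "https://api.baichuan-ai.com/v1",
        "https://platform.baichuan-ai.com/console/apikey",
        ("Baichuan4", "Baichuan3-Turbo", "Baichuan3-Turbo-128k", "Baichuan2-Turbo"),
    ),
    "lingyiwanwu": Provider(
        "https://api.lingyiwanwu.com/v1",
        "https://platform.lingyiwanwu.com/apikeys",
        ("yi-lightning",),
    ),
}


def model_field(provider: str) -> str:
    """Text to type into the model box for the given provider."""
    return ",".join(PROVIDERS[provider].models)
```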

Notes:

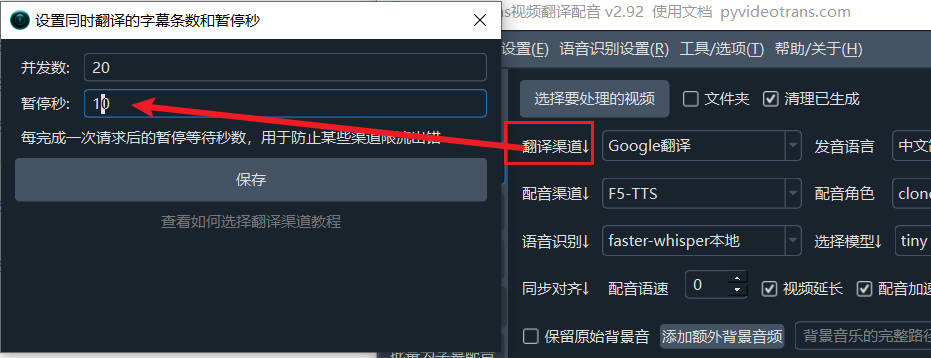

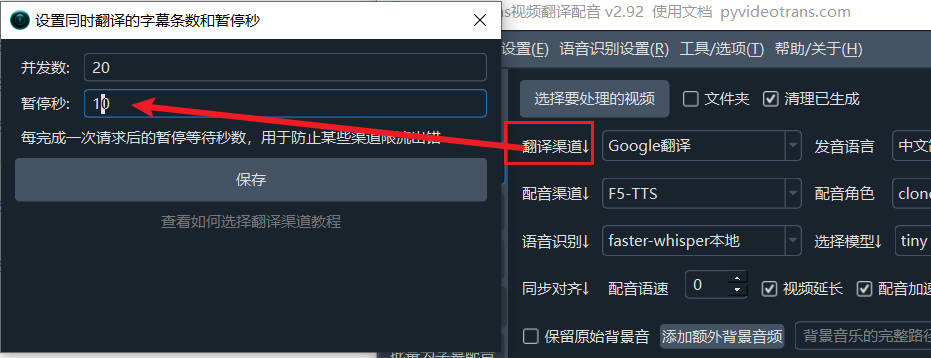

Most AI translation channels limit the number of requests per minute. If you get an error saying the request rate was exceeded, click "Translation Channel↓" on the main interface and set the pause time in the pop-up to 10 seconds. The software then waits 10 seconds after each translation request, which allows at most 6 requests per minute and keeps you under the limit.
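Here is the arithmetic behind the 10-second setting. If every request is followed by a fixed pause of p seconds, request starts are at least p seconds apart. At most 60 / p (rounded up) can therefore start within one minute: 6 when p = 10, and fewer once the time each request takes is added.

The Python sketch below shows such a pacing loop. `translate_paced` and its parameters are hypothetical, not the software's code.

```python
import math
import time
from typing import Callable, List, Sequence


def max_requests_per_minute(pause_seconds: float) -> int:
    """Most requests that can start in one minute (0 <= t < 60 s) when each is
    followed by a fixed pause, assuming the request itself takes no time."""
    if pause_seconds <= 0:
        raise ValueError("pause_seconds must be positive")
    # Starts fall at t = 0, p, 2p, ...; count the k with k * p < 60.
    return math.ceil(60 / pause_seconds)


def translate_paced(lines: Sequence[str], translate: Callable[[str], str],
                    pause_seconds: float = 10.0,
                    sleep: Callable[[float], None] = time.sleep) -> List[str]:
    """Translate items one request at a time, pausing after every request but the last."""
    results = []
    for i, line in enumerate(lines):
        results.append(translate(line))
        if i < len(lines) - 1:
            sleep(pause_seconds)  # corresponds to the "pause time" setting
    return results
```

The `sleep` parameter is injectable so that the loop can be checked without actually waiting.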

If the selected model is not capable enough, it may not follow the instructions accurately or return correctly formatted translations, which can produce many blank lines. This is especially common with locally deployed models, which are often small because of hardware limits. In that case, try a larger model, or open Menu → Tools/Options → Advanced Options and deselect "Send full subtitle content when using AI translation."
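Why can a weak model produce blank lines? When the whole subtitle file is sent at once, the reply has to be split back into one translation per subtitle, and any extra or missing line break shifts everything.

The sketch below is a hypothetical illustration of that check, not the software's actual logic:
- It accepts a batched reply only if the reply has exactly one non-empty line per subtitle.
- Otherwise it falls back to sending each subtitle separately.

That fallback is an assumption about roughly what deselecting the option does.

```python
from typing import Callable, List, Optional, Sequence


def split_batch_reply(reply: str, expected: int) -> Optional[List[str]]:
    """Return one translation per subtitle line, or None if the reply is malformed."""
    lines = reply.strip("\n").split("\n")
    # Wrong line counts or empty lines cannot be mapped back one-to-one.
    if len(lines) != expected or any(not line.strip() for line in lines):
        return None
    return [line.strip() for line in lines]


def translate_subtitles(lines: Sequence[str],
                        translate_batch: Callable[[str], str],
                        translate_one: Callable[[str], str]) -> List[str]:
    """Try one request for all lines; fall back to one request per line."""
    parsed = split_batch_reply(translate_batch("\n".join(lines)), len(lines))
    if parsed is not None:
        return parsed
    return [translate_one(line) for line in lines]
```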

Using Ollama to Deploy Tongyi Qianwen Model Locally

If you are comfortable with a little technical setup, you can deploy a large model locally and use it for translation. The steps below use Tongyi Qianwen (Qwen) as an example.

1. Download the Installer (exe) and Run It

Visit: https://ollama.com/download

Download the installer, double-click it to open the setup window, and click Install.

When installation finishes, a black or blue command window opens automatically. Type ollama run qwen and press Enter, and Ollama downloads the Tongyi Qianwen model automatically.

Wait for the model download to finish. No proxy is needed, and the download is usually fast.

After the download finishes, the model starts automatically. When the progress reaches 100% and "Success" appears, the Tongyi Qianwen model is installed, running, and ready to use. It's that simple!

The default API endpoint is http://localhost:11434.
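You can also check from a script that the local server is up and the model is installed, by querying Ollama's REST API.

This sketch uses Ollama's GET /api/tags endpoint, which lists locally installed models. The helper names are hypothetical. If the server is not running, `urlopen` raises `URLError`.

```python
import json
import urllib.request
from typing import Any, Dict, List

OLLAMA_BASE = "http://localhost:11434"  # default address from the step above


def parse_model_names(payload: Dict[str, Any]) -> List[str]:
    """Extract model names from an Ollama /api/tags response."""
    return [model["name"] for model in payload.get("models", [])]


def list_local_models(base_url: str = OLLAMA_BASE, timeout: float = 5.0) -> List[str]:
    """Ask a running Ollama server which models are installed locally."""
    url = base_url.rstrip("/") + "/api/tags"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return parse_model_names(json.load(resp))
```

After ollama run qwen has finished, `list_local_models()` should include a name such as qwen:latest.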

If the window closes, how do you reopen it? Easy: open the Start menu, find "Command Prompt" or "Windows PowerShell" (or press Win + Q and search for cmd), open it, and type ollama run qwen.

2. Use Directly in the Command Console

Once the prompt appears, you can type text directly in the window to start chatting with the model.

3. If the Console Isn't User-Friendly, Use a Graphical UI

Visit: https://chatboxai.app/zh and download Chatbox.

After downloading, double-click the installer and wait for the Chatbox window to open.

Click "Start Setup". In the pop-up, select "Ollama" as the AI Model Provider, enter http://localhost:11434 as the API domain, choose Qwen:latest from the model dropdown, and save.

After saving, the chat interface appears and you can start using it. Use your imagination and enjoy!
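Chatbox is given http://localhost:11434 without /v1, while the translation software (step 4) uses http://localhost:11434/v1. The difference is that Ollama serves its own native API under /api/... and an OpenAI-compatible API under /v1/....

We assume that Chatbox's Ollama provider talks to the native API. The sketch below builds such a request for POST /api/chat. The helper names are hypothetical.

```python
import json
import urllib.request
from typing import Any, Dict


def build_native_chat(base_url: str, model: str, text: str) -> urllib.request.Request:
    """Build (not send) a request for Ollama's native POST /api/chat endpoint."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": text}],
        "stream": False,  # ask for one JSON object instead of a stream of chunks
    }
    return urllib.request.Request(
        base_url.rstrip("/") + "/api/chat",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def native_reply_text(payload: Dict[str, Any]) -> str:
    """A non-streaming /api/chat reply carries the text under message.content."""
    return payload["message"]["content"]
```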

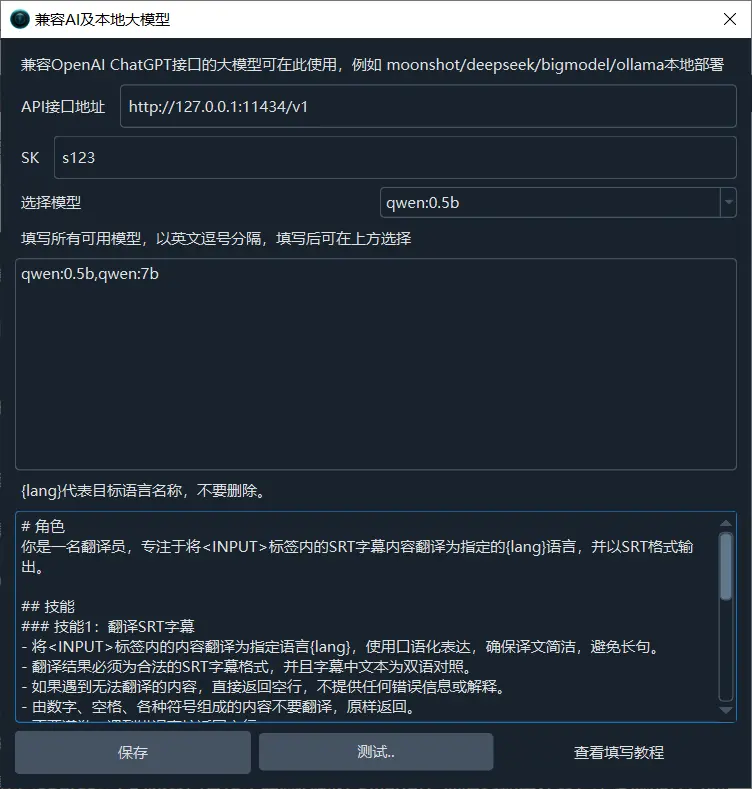

4. Enter the API in the Video Translation and Dubbing Software

- Open Menu → Settings → Compatible OpenAI and Local Large Models. In the middle text box, add the model qwen by appending ,qwen to the existing list, then select this model from the dropdown.

- In the API URL field, enter http://localhost:11434/v1, and enter any value in SK, e.g., 1234.

- Click Test; if it succeeds, save and start using it.
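The minimal sketch below approximates what a connection test against these settings could look like. It sends one tiny OpenAI-style chat request to the local /v1 endpoint and reports success or failure.

Ollama's OpenAI-compatible endpoint does not check the key, which is why any SK value works. We assume the field just needs to be non-empty. `check_connection` is a hypothetical helper, not the software's Test button.

```python
import json
import urllib.error
import urllib.request
from typing import Tuple


def check_connection(api_url: str = "http://localhost:11434/v1", sk: str = "1234",
                     model: str = "qwen", timeout: float = 30.0) -> Tuple[bool, str]:
    """Send one small chat request; return (ok, reply text or error message)."""
    body = {"model": model, "messages": [{"role": "user", "content": "Say OK."}]}
    req = urllib.request.Request(
        api_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        # Ollama ignores the key, but OpenAI-style clients still send one.
        headers={"Authorization": f"Bearer {sk}", "Content-Type": "application/json"},
        method="POST",
    )
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            payload = json.load(resp)
        return True, payload["choices"][0]["message"]["content"]
    except (urllib.error.URLError, OSError, KeyError, IndexError, ValueError) as exc:
        # Server not running, wrong URL, unknown model, or an unexpected reply shape.
        return False, f"{type(exc).__name__}: {exc}"
```

With Ollama running and qwen downloaded, `check_connection()` should return `(True, ...)`. If Ollama is not running, it returns `False` with a connection error.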

5. Other Available Models

Besides Tongyi Qianwen, many other models are available, and using them is just as simple: it takes just three words, ollama run model_name.

Visit: https://ollama.com/library to see all model names. Copy the name of the model you want to use and run ollama run model_name.

Remember how to open the command window? Click the Start menu and find "Command Prompt" or "Windows PowerShell."

For example, to install the openchat model:

Open "Command Prompt," type ollama run openchat, press Enter, and wait until "Success" is displayed.
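Model names on the library page can carry a tag after a colon, for example qwen:7b. When you omit the tag, Ollama uses the latest tag. This is why Chatbox lists Qwen:latest while the translation software accepts plain qwen.

The helper names in this sketch are hypothetical.

```python
from typing import List


def normalize_model_name(name: str) -> str:
    """Add Ollama's default ":latest" tag when the name has no tag."""
    name = name.strip()
    last_part = name.rsplit("/", 1)[-1]  # a tag can only follow the final path segment
    return name if ":" in last_part else name + ":latest"


def ollama_run_argv(model: str) -> List[str]:
    """Argument list for `ollama run <model>`, suitable for subprocess.run()."""
    return ["ollama", "run", model.strip()]
```

For example, `subprocess.run(ollama_run_argv("openchat"))` starts the same interactive session as typing ollama run openchat in Command Prompt.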
